Changelog

Salvus version 2024.1.2

Released: 2024-10-14

This is a minor release with several bug fixes and performance improvements.

New features include utilities for switching the material parameterization and extracting spatial gradients of a model, improved support for meshes stored in the ExodusII file format, the ability to add new sites on the fly, a callback option for post-processing model updates in an inversion, and experimental support for importing sources on a fault using the Standard Rupture Format (SRF).

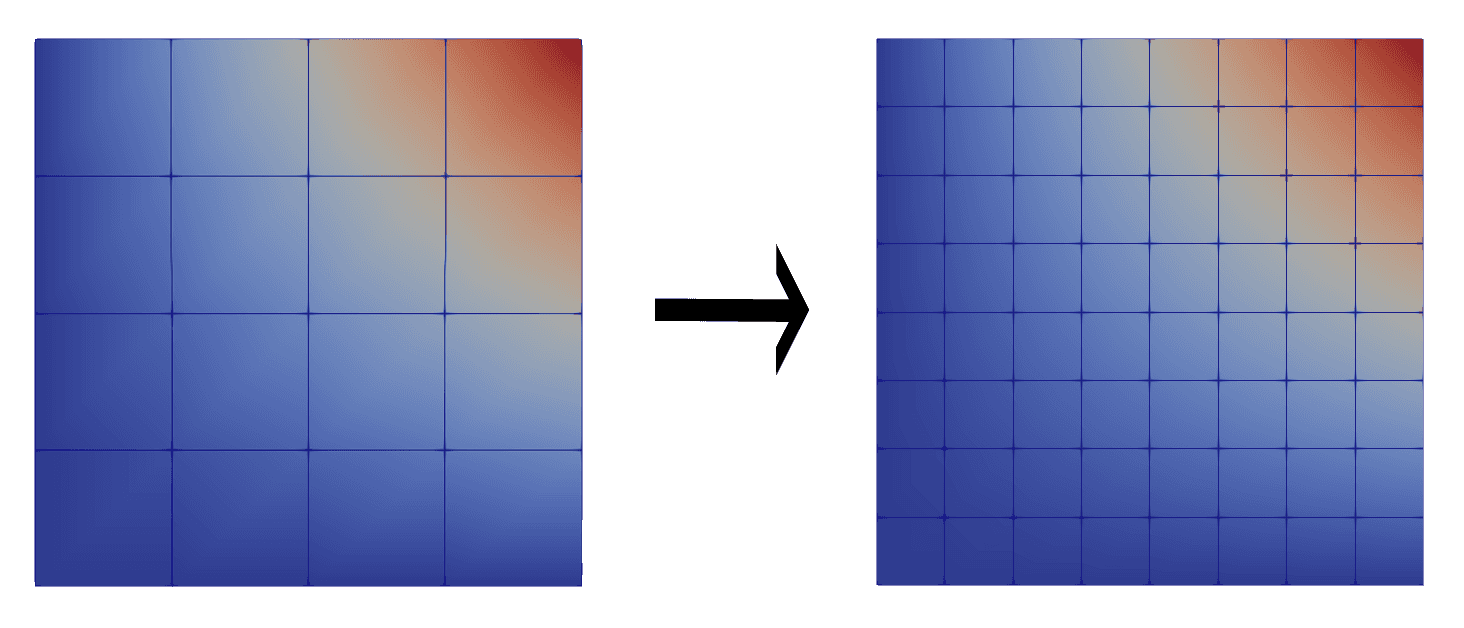

The performance improvements provide significant speed-ups for interpolating volumetric models and local mesh refinements.

Further details are listed below.

SalvusFlow

Salvus on local sites typically fully decouples child processes (e.g., running computations) from their parent process to allow for asynchronous workflows. In some situations this is not desirable (e.g., when one wants to ensure that all Salvus runs are terminated when the Python process is terminated). An optional "do_not_decouple_child_processes" flag for local sites has been introduced to support that behavior.

SalvusFlow

Added the possibility to add new executors/sites on the fly, skipping all the checks of the salvus-cli init-site step. Usage as a context manager is optional.

```python
import salvus.namespace as sn

# salvus_binary, run_directory_2 and tmp_directory_2 are placeholders for
# paths on the local machine.
with sn.functions.add_new_executor(
    site_name="my_other_site",
    executor_type="local",
    salvus_binary=salvus_binary,
    run_directory=run_directory_2,
    tmp_directory=tmp_directory_2,
    use_cuda_capable_gpus=False,
    default_ranks=1,
    max_ranks=2,
):
    # Site is available here.
    sn.functions.get_site("my_other_site")
```

SalvusFlow

Filter out the warning regarding cryptography's moved import since, with the current environment as distributed, it does not affect Salvus users.

SalvusFlow

Fix the misfit computation when the time axis is improperly subsampled. This happens, e.g., when the last sample of a receiver (the last simulation time step) does not align with the regular time grid, which occurs when using a receiver sampling interval greater than one time step.
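A minimal sketch of the alignment issue, assuming that a receiver stores every k-th time step starting at step 0 and always stores the final step (steps numbered 0 to n_time_steps). The helpers recorded_sample_indices and enforce_regular_grid are hypothetical and not part of SalvusFlow.

```python
import numpy as np


def recorded_sample_indices(n_time_steps: int, sampling_interval: int) -> np.ndarray:
    """Hypothetical model of which time steps a receiver stores.

    Assumes steps are numbered 0..n_time_steps, every `sampling_interval`-th
    step is stored, and the final step is always stored as well.
    """
    last_step = n_time_steps
    indices = np.arange(0, n_time_steps + 1, sampling_interval)
    if indices[-1] != last_step:
        # The final step is off the regular grid but still recorded.
        indices = np.append(indices, last_step)
    return indices


def enforce_regular_grid(indices: np.ndarray, sampling_interval: int) -> np.ndarray:
    """Drop the final sample if it does not lie on the regular subsampled grid."""
    if indices[-1] % sampling_interval != 0:
        return indices[:-1]
    return indices
```

With 100 time steps and a sampling interval of 11, the final step 100 is not a multiple of 11, so the regularized axis ends at step 99 with a uniform spacing of 11 steps.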

SalvusMaterial

Fix a bug where attenuating tensor components were not split into base and visco parts when computing wavelengths.

SalvusMesh

Add functions to compute spatial derivatives of arbitrary fields defined at the GLL points of an unstructured mesh using the Lagrangian basis.

```python
import numpy as np
import sympy

import salvus.namespace as sn
from salvus.mesh import layered_meshing as lm

n = 4  # polynomial order
domain = sn.domain.dim2.BoxDomain(x0=0, x1=1, y0=0, y1=1)

# Create a simple mesh to use as a test. Let parameters vary only in a
# single direction so that we know their gradients in advance.
mesh = lm.mesh_from_domain(
    domain=domain,
    model=lm.material.from_params(
        vp=1 + sympy.symbols("x"), rho=sympy.symbols("y")
    ),
    mesh_resolution=sn.MeshResolution(
        reference_frequency=10.0,
        elements_per_wavelength=1.0,
        model_order=n,
    ),
)

# Compute the spatial derivatives of the model.
mesh.compute_spatial_gradients(fields=["VP", "RHO"])

# VP varies only in the x direction.
np.testing.assert_allclose(mesh.element_nodal_fields["dVP/dx"], 1.0)
np.testing.assert_allclose(
    mesh.element_nodal_fields["dVP/dy"], 0.0, atol=1e-10
)

# RHO varies only in the y direction.
np.testing.assert_allclose(
    mesh.element_nodal_fields["dRHO/dx"], 0.0, atol=1e-10
)
np.testing.assert_allclose(mesh.element_nodal_fields["dRHO/dy"], 1.0)
```
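To illustrate what differentiating "using the Lagrangian basis" means, here is a one-dimensional sketch in plain NumPy; it is not the Salvus implementation. The derivative of a field sampled at the GLL points of one element is obtained by multiplying with the derivative matrix of the Lagrange polynomials through those points, which is exact for polynomials up to the element's order. The function names are illustrative only.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def gll_points(n: int) -> np.ndarray:
    """GLL points for polynomial order n: the endpoints plus the roots of P_n'."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0  # Legendre series containing only P_n
    interior = np.real(leg.legroots(leg.legder(coeffs)))
    return np.concatenate(([-1.0], np.sort(interior), [1.0]))


def lagrange_derivative_matrix(x: np.ndarray) -> np.ndarray:
    """D[i, j] = l_j'(x_i) for the Lagrange basis through the nodes x."""
    diff = x[:, None] - x[None, :]
    np.fill_diagonal(diff, 1.0)
    # Barycentric weights w_j = 1 / prod_{k != j} (x_j - x_k).
    w = 1.0 / np.prod(diff, axis=1)
    D = (w[None, :] / w[:, None]) / diff
    np.fill_diagonal(D, 0.0)
    # Each row sums to zero because the derivative of a constant vanishes.
    np.fill_diagonal(D, -D.sum(axis=1))
    return D


# Differentiate x**3 on the physical element [a, b] (Jacobian (b - a) / 2).
xi = gll_points(4)
D = lagrange_derivative_matrix(xi)
a, b = 0.0, 1.0
x = a + (xi + 1.0) * (b - a) / 2.0
dfdx = (2.0 / (b - a)) * D @ x**3
```

For tensor-product elements in 2-D or 3-D, the same 1-D matrix is typically applied along each reference direction and combined with the inverse Jacobian of the element mapping.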

SalvusMesh

When interpolating xarray datasets using get_interpolator(...) from xarray_tools, faster interpolations are now provided via the fast_interp package. This package is used automatically if the interpolation source is regularly spaced and 2-D or 3-D, and the "linear" interpolation method is selected.
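The conditions above can be expressed as a small check. This is a sketch only: is_regularly_spaced and can_use_fast_path are hypothetical helpers, and the tolerance used to decide whether a grid is regular is an assumption, not the value Salvus uses.

```python
import numpy as np


def is_regularly_spaced(coord, rtol: float = 1e-8) -> bool:
    """True if a 1-D coordinate array has a constant grid spacing."""
    steps = np.diff(np.asarray(coord, dtype=float))
    return steps.size > 0 and bool(np.allclose(steps, steps[0], rtol=rtol, atol=0.0))


def can_use_fast_path(coords: list, method: str) -> bool:
    """Hypothetical mirror of the conditions stated in the changelog."""
    return (
        method == "linear"
        and len(coords) in (2, 3)
        and all(is_regularly_spaced(c) for c in coords)
    )
```

A single irregular axis is enough to rule out the fast path.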

Note that if the source grid has a variable grid spacing in any dimension, Salvus falls back on SciPy's regular grid interpolation routines.

SalvusMesh

Adds a new argument enclosing_elements_method to the simple_post_refinements generator. The default value is "inverse_coordinate_transform", which retains the legacy behavior. Another value, "bounding_box", is also supported, which trades the accuracy of the enclosing element search for performance, as only a simple bounding box check is done to determine point ownership.

SalvusMesh

Fix a bug that erroneously tried to truncate discrete relative models when two neighboring interfaces had coincident reference elevations.

SalvusMesh

Fix a bug in the mesher where certain non-monotonic models would cause an error.

SalvusMesh

Salvus can now read Exodus files with multiple element blocks, read them partially, and optionally store the element block index in the mesh.

```python
# To attach the block ids to the mesh
m = sn.UnstructuredMesh.from_exodus(
    filename, attach_element_block_indices=True
)

# To only read given blocks
m = sn.UnstructuredMesh.from_exodus(
    filename,
    select_element_block_indices=[2, 4],
)
```

SalvusMesh

Allow conversion of unstructured mesh parameters using mesh.transform_parameter, which automatically tries to convert the parameters defined on a mesh to the chosen material, if they are compatible, and returns a new mesh. This is ideal when a model in a different parameterization is desired. When creating meshes from materials, the converted mesh will typically be identical to a mesh generated from the converted material itself.

```python
import salvus.namespace as sn
from salvus import material

d = sn.domain.dim2.BoxDomain(x0=0, x1=10, y0=0, y1=10)
mr = sn.MeshResolution(reference_frequency=1)

mesh_material_iso = sn.layered_meshing.mesh_from_domain(
    domain=d,
    model=material.acoustic.Velocity.from_params(vp=1.0, rho=1.0),
    mesh_resolution=mr,
)
mesh_material_tho = sn.layered_meshing.mesh_from_domain(
    domain=d,
    model=material.acoustic.elliptical_hexagonal.Velocity.from_params(
        vpv=1.0, vph=1.0, rho=1.0
    ),
    mesh_resolution=mr,
)

converted_mesh = mesh_material_iso.transform_material(
    material.acoustic.elliptical_hexagonal.Velocity
)
assert converted_mesh == mesh_material_tho
```
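At each point of the mesh, such a transformation is a pointwise change of variables. As a minimal illustration in plain NumPy (not the Salvus API; the function names are hypothetical), the textbook acoustic relation kappa = rho * vp**2 converts between a velocity and a bulk-modulus parameterization. In the example above the mapping is even simpler, since an isotropic model corresponds to vpv = vph = vp.

```python
import numpy as np


def velocity_to_bulk_modulus(vp, rho):
    """Acoustic conversion (vp, rho) -> (kappa, rho) using kappa = rho * vp**2."""
    vp = np.asarray(vp, dtype=float)
    rho = np.asarray(rho, dtype=float)
    return rho * vp**2, rho


def bulk_modulus_to_velocity(kappa, rho):
    """Inverse conversion (kappa, rho) -> (vp, rho) using vp = sqrt(kappa / rho)."""
    kappa = np.asarray(kappa, dtype=float)
    rho = np.asarray(rho, dtype=float)
    return np.sqrt(kappa / rho), rho
```

Applying one conversion after the other recovers the original values, which is the property that makes the two parameterizations interchangeable.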

SalvusMesh

An elastic version of the Thomsen parameters material (material.elastic.hexagonal.Thomsen) has been added. It provides from_acoustic, which needs just two extra parameters to be injected, and it can convert back using to_acoustic.

SalvusProject

Fix a bug where linear solids would not be properly passed to viscoelastic Cartesian models in SalvusProject, resulting in an error during mesh generation.

Changes to Experimental Features in 2024.1.2

SalvusFlow

Add a reader for specifying seismic ruptures in the Standard Rupture Format version 2.0. This is useful for modeling a rupture along a fault by generating a collection of point sources in Salvus.

Example:

```python
from salvus.flow.simple_config.source.srf_file_reader import _read_srf_file

srf_data = _read_srf_file(
    "example.srf",
    plot=True,
)
```

This is an experimental feature and the API might change in the future.

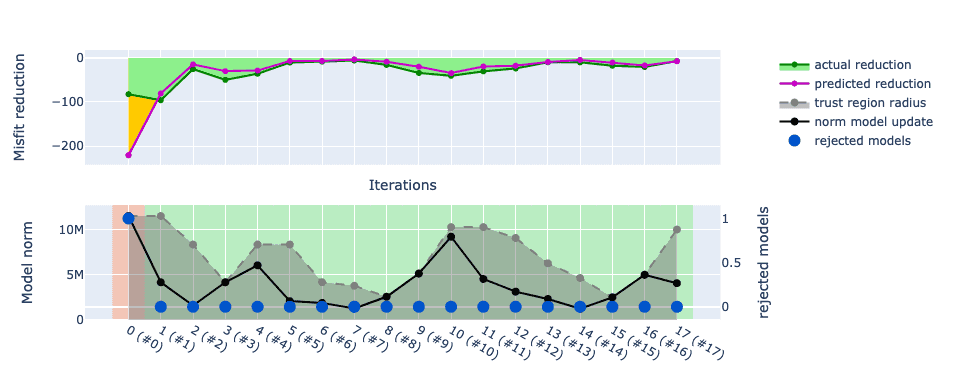

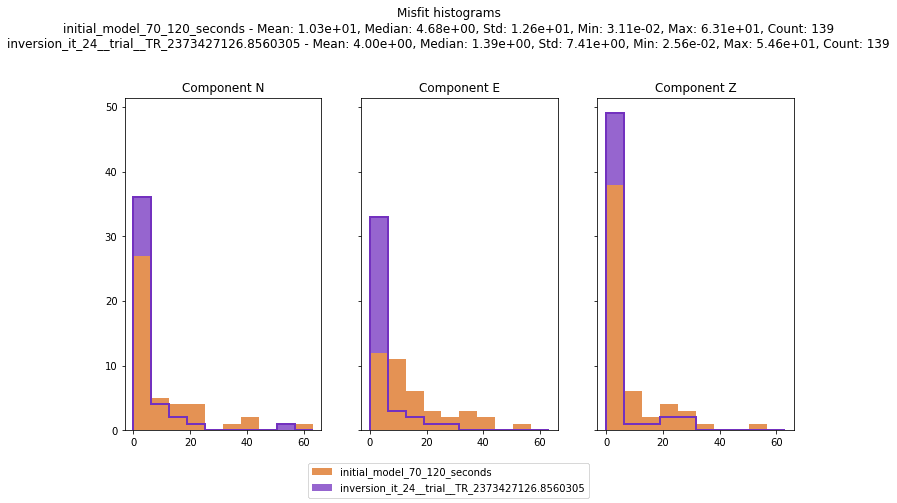

SalvusOpt

Add a callback function to the Mapping class which allows post-processing of proposed model updates before validation of the misfit reduction. This feature can be useful to introduce prior knowledge on material properties and to emphasize certain features in the model updates.

Example:

```python
import salvus.namespace as sn


def fixed_vp_vs_ratio(
    proposed_model: sn.UnstructuredMesh,
    prior_model: sn.UnstructuredMesh,
) -> sn.UnstructuredMesh:
    vp_vs_ratio = ...  # some value
    model_update = proposed_model.copy()
    model_update.elemental_fields["VP"] = (
        vp_vs_ratio * model_update.elemental_fields["VS"]
    )
    return model_update


mapping = sn.Mapping(
    inversion_parameters=["VS"],
    scaling="relative_deviation_from_prior",
    postprocess_model_update=fixed_vp_vs_ratio,
)
```

The mapping can then be passed to an InverseProblemConfiguration to enforce a fixed VP/VS ratio without inverting for VP.

Salvus version 2024.1.1

Released: 2024-07-02

This is the first minor update to the 2024.1 release.

Notable changes include the handling of material parameterizations in Salvus and a new way of parallelizing simulations on shared file systems (e.g., local sites or workstations). We have also made it much easier to add external data to a project.

SalvusFlow

The updated Salvus Jupyter notebook snippets collection now works with Jupyter Notebook >= 7 as well as JupyterLab.

```
%load_ext salvus
%salvus_snippets
```

SalvusFlow

Added new functionality to facilitate adding external data to a Salvus project per event. The function salvus.data.external_data_to_hdf5 takes an xarray.Dataset, validates it, and converts it to a Salvus-compatible HDF5 file, which can be added to a project.

```python
from salvus.data import external_data_to_hdf5

for event in project.events.list():
    external_data_to_hdf5(
        data=data_per_event,
        receivers=event.receivers,
        receiver_field="displacement",
        output_filename=external_data_path,
    )
    project.waveforms.add_external(
        data_name="observed_data",
        event=event.event_name,
        data_filename=external_data_path,
    )
```

SalvusMesh

The internal BM file writer in SalvusMesh previously did not check for invalid interface definitions and may have written BM files containing a triplication (or greater) of coordinate/value pairs at an interface. This has now been remedied, and BM files generated via get_bm_string should now contain at most duplicated pairs. This issue may have affected those generating background models in SalvusProject from an n-dimensional xarray dataset.

SalvusMesh

The material submodule of the layered_mesher has been promoted to a full-fledged top-level module of Salvus. Although it is still available as an alias in the layered mesher submodule of salvus.mesh (i.e., salvus.mesh.layered_mesher.material), accessing it this way is discouraged.

SalvusMesh

Added the option to pass materials to background models, as follows:

```python
import salvus.namespace as sn
from salvus import material

# Acoustic isotropic material
material_sandstone = material.acoustic.Velocity.from_params(
    rho=1500, vp=1875
)

# Or something more exotic
material_weird_sandstone = material.elastic.triclinic.TensorComponents(
    ...
)

mc_sandstone = sn.ModelConfiguration(
    background_model=sn.model.background.homogeneous.FromMaterial(
        material_sandstone
    )
)
sc_sandstone = sn.SimulationConfiguration(
    name="sandstone",
    model_configuration=mc_sandstone,
    ...
)
```

Only homogeneous, location-independent materials are supported.

SalvusMesh

Added find_side_sets_enclosing as a mesh method to find all side sets that enclose the mesh, by first finding all planar surfaces and then constructing the remainder surface from all unclaimed facets.

SalvusProject

Fix a bug where the ocean layer would not be positioned correctly in Cartesian domains with strict top and bottom domain boundaries.

SalvusProject

Salvus now supports custom data normalization functions for use in misfit computations and inverse problems.

```python
def forward_norm(data_synthetic, data_observed, sampling_rate_in_hertz):
    ...


def jacobian_norm(
    adjoint_source, data_synthetic, data_observed, sampling_rate_in_hertz
):
    ...


norm = sn.TraceNormalization(forward=forward_norm, jacobian=jacobian_norm)

p += sn.MisfitConfiguration(
    ...,
    normalization=norm,
)
```

SalvusProject

Added an optional events setting to p.viz.nb's misfits to visualize misfits only for selected events. If not passed, the previous default of all events is used. Also allows silent queries with p.simulations.query(), which is useful when calling query often and keeping output down.

SalvusProject

Added fast_unsafe as a setting to p.viz.nb's shotgather and custom_gather to visualize data not on the densest but on the sparsest time axis of the selected datasets. This might alias the data.

SalvusProject

Fix a bug where computing misfits would not honor the receiver_sampling_rate_in_time_steps of a simulation configuration. By default, Salvus will now drop the final data point if it does not lie on the regular time grid of the subsampled simulation output, e.g., with 100 time steps but a receiver sampling interval of 11. The receiver sampling interval can be set via:

```python
sn.WaveformSimulationConfiguration(
    receiver_sampling_rate_in_time_steps=11,
    ...
)
```

To recreate the previous behavior, use EventData.get_waveform_data(..., enforce_regular_time_grid=False), but note that the resulting data will then be regularly spaced for all but the last sample.

EventData's get_time_axis_from_meta_json now optionally accepts the output type for which the time axis should be retrieved, defaulting to the simulation's time axis (no subsampling).

SalvusProject

Improve the error reporting when re-running simulations in SalvusProject with modified outputs in the extra_output_configuration.

SalvusProject

Improve the error message when trying to load a project from a path that either does not exist or is not a directory.

SalvusProject

Speed up the generation of input files and hashes of simulations. This should give a noticeable improvement for simulations with event-dependent sources and many events.

In addition, a utility to simultaneously query the output directories for several events has been added:

```python
p.simulations.get_simulation_output_directories(
    simulation_configuration="my_simulation", events=p.events.list()
)
```

Changes to Experimental Features in 2024.1.1

SalvusMaterial

Added generic salvus.material.from_params() and salvus.material.from_dataset() methods that automatically find and initialize the appropriate material class.

SalvusProject

Salvus now supports a new way of launching simulations using p.simulations.run(). This function waits for all simulations to finish before returning and enables a new form of parallelism.

In addition to specifying the site configuration with sn.SiteConfig, this new function can also run several simulations in parallel on a local (or SSH) system, which can give a substantial speed-up for use cases with many small simulations.

Usage example:

```python
task_chain_config = TaskChainSiteConfig(
    site_name="test_site",
    number_of_parallel_workers=5,
    ranks_per_salvus_simulation=2,
    max_threads_per_worker=2,
    shared_file_system=True,
)
p.simulations.run(
    events=p.events.list(),
    simulation_configuration="my_simulation",
    site_config=task_chain_config,
)
```

The feature is currently still experimental. Additional output settings are not yet supported, and we recommend using p.simulations.launch() for those instead.

Salvus version 2024.1.0

Released: 2024-05-15

This is a major release which brings in several important changes. Salvus now natively supports Windows, which required a lot of modernizations and changes under the hood. However, we've been able to largely maintain backwards compatibility with the existing API.

See here for the installation instructions.

Further notable changes include:

- The dependency on libmpicxx has been removed. Salvus runs with any ABI-compatible MPI implementation, such as MPICH, and only the C interface and library are required at runtime.
- A versioning scheme for organizing a project's internal files has been introduced.
- A major upgrade of the internal meshing routines for layered models and an overhaul of the implementation of linear solids.

Additional new features and bug fixes are listed below.

If you have any questions or concerns about upgrading, please reach out in the user forum or contact [email protected] .

SalvusCompute

Upgrade to PETSc 3.20.5 and remove the dependency on libmpicxx.

SalvusFlow

Fix a bug in salvus-cli status that would throw an index error when trying to query empty job arrays from the database.

SalvusFlow

Adjoint sources can now be computed for receiver channels.

SalvusFlow

Updated the Salvus Jupyter notebook snippets collection. It can be accessed by executing the following in a Jupyter notebook:

```
%load_ext salvus
%salvus_snippets
```

SalvusMesh

API CHANGE

Add utilities for converting attenuation parameters and unify the handling of linear solids.

The class sn.simple_mesh.linear_solid.LinearSolid has been removed and superseded. The class sn.LinearSolids can be used directly for a constant-Q approximation. Alternatively, and for more fine-grained control, the coefficients of the standard linear solids (SLS) can be obtained using salvus.material.attenuation.lsqr_fit_q_factor_model.

SalvusMesh

Addition of algorithms to modify side sets in salvus.mesh.algorithms.unstructured_mesh_utils: retain_unique_facets, uniquefy_side_sets, get_internal_side_set_facets, and disconnect_along_side_set.

SalvusMesh

Allow computing normal vectors and related rotation matrices for side sets of a mesh.

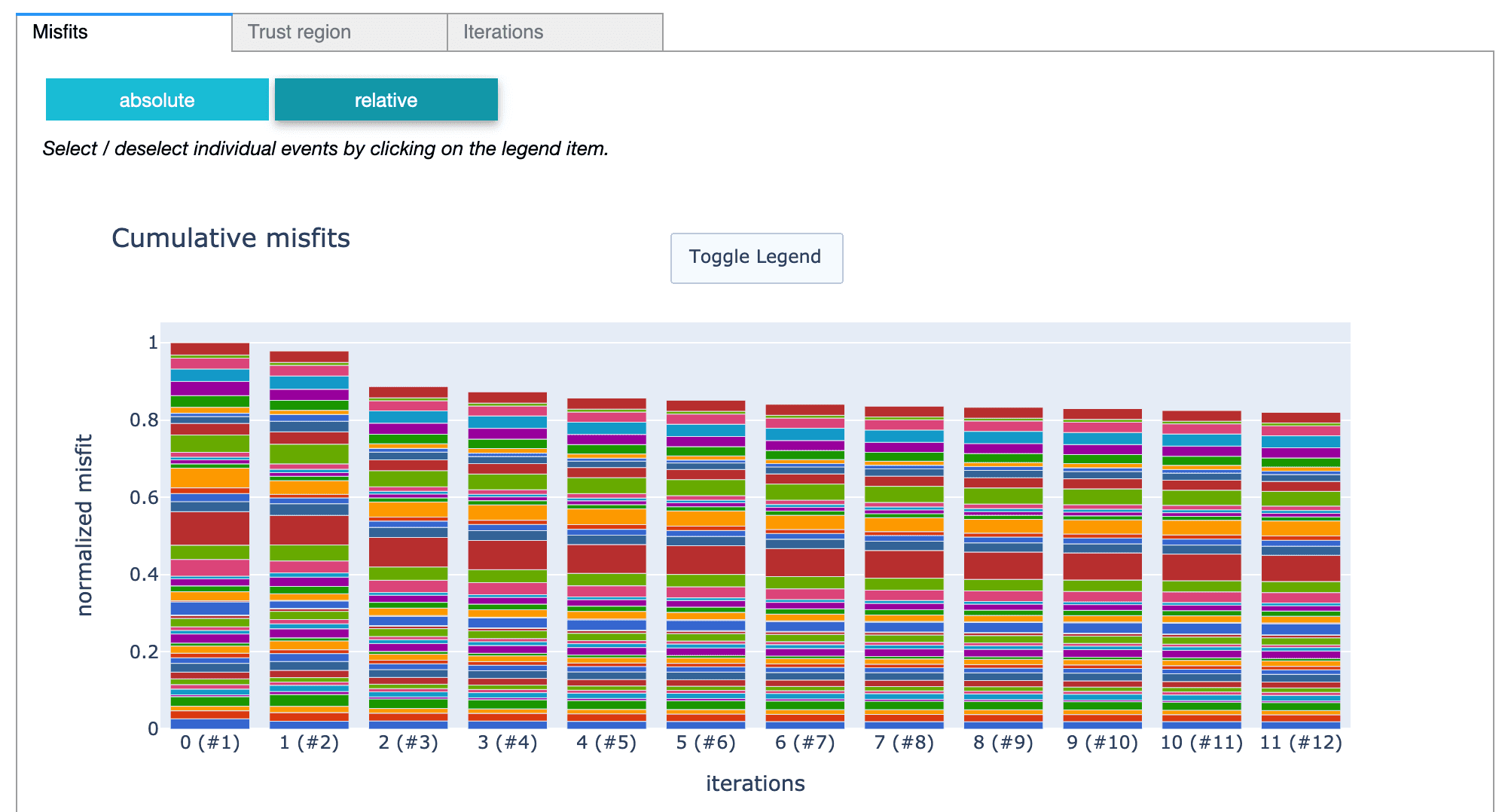

SalvusProject

Add a method to get all misfits of an inversion and a method to get all inverse_problem_configurations: p.inversions.get_misfits() and p.entities.get_inverse_problem_configurations(), respectively.

SalvusProject

The default seismology data processing function shows a lot of warnings from the external library ObsPy. If the wurlitzer library is installed, it will now be used to suppress these warnings.

SalvusProject

Start versioning the file structure of SalvusProject. Users are able to migrate from an older version of the file structure to a newer one.

Salvus version 0.12.16

Released: 2024-02-26

This is a minor release with several quality of life improvements and minor bug

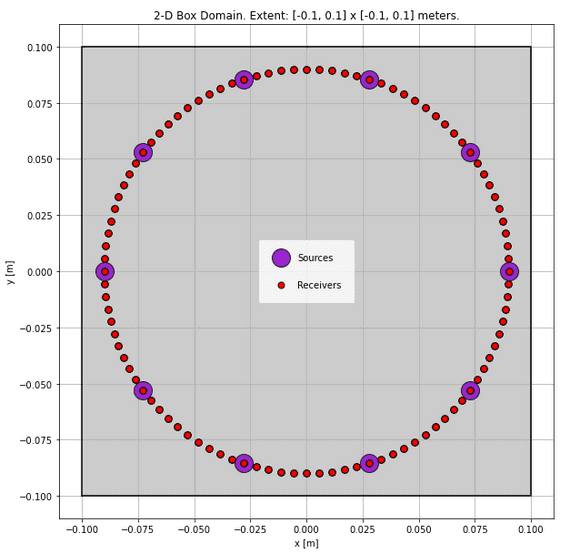

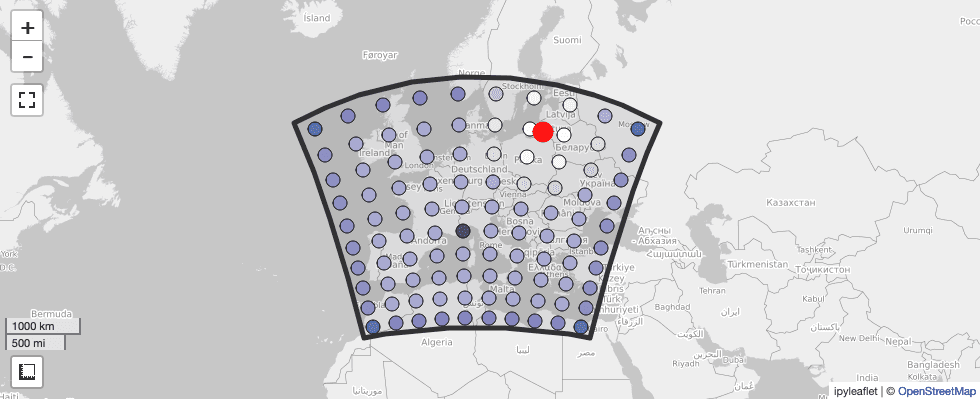

fixes as listed below. New features include receiver channels for modeling

ultrasonic sensors, better handling of volume and surface data, transformations

for moment-tensor sources in UTM domains, and utilities to preprocess layered

models.

SalvusFlow

Added spherical to UTM moment tensor transformations, both ways.
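Part of such a transformation is a change of basis of the moment tensor. The sketch below covers only the local rotation from the spherical basis (r up, theta south, phi east) to east/north/up, M_enu = R M_sph R^T; a full spherical-to-UTM transformation additionally depends on the source location and possibly the UTM grid convergence, which this sketch deliberately omits. The function names are hypothetical and not the SalvusFlow API.

```python
import numpy as np

# Rows: east, north and up unit vectors expressed in the (r, theta, phi) basis,
# with r pointing up, theta pointing south and phi pointing east.
R_SPH_TO_ENU = np.array(
    [
        [0.0, 0.0, 1.0],  # east  =  e_phi
        [0.0, -1.0, 0.0],  # north = -e_theta
        [1.0, 0.0, 0.0],  # up    =  e_r
    ]
)


def sph_to_enu(m_sph: np.ndarray) -> np.ndarray:
    """Rotate a symmetric 3x3 moment tensor from (r, theta, phi) to (E, N, U)."""
    return R_SPH_TO_ENU @ m_sph @ R_SPH_TO_ENU.T


def enu_to_sph(m_enu: np.ndarray) -> np.ndarray:
    """Inverse rotation; R is orthogonal, so its inverse is its transpose."""
    return R_SPH_TO_ENU.T @ m_enu @ R_SPH_TO_ENU
```

In components this yields the familiar relations M_ee = M_pp, M_nn = M_tt, M_uu = M_rr, M_en = -M_tp, M_eu = M_rp and M_nu = -M_rt.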

SalvusFlow

Added the option to combine data from several receivers into a single output channel. Each channel is a weighted sum of the individual, potentially time-shifted, receiver signals.
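By definition, such a channel is c(t) = sum_i w_i * s_i(t - tau_i). The NumPy sketch below evaluates that sum after the fact for traces on a common time axis; it only illustrates the definition and is not how Salvus computes channels. The function name and the choice of linear interpolation for fractional shifts are assumptions.

```python
import numpy as np


def combine_receivers(signals, weights, shifts_in_s, dt):
    """Weighted sum of time-shifted traces: c(t) = sum_i w_i * s_i(t - tau_i).

    Shifts are applied by linear interpolation on the common time axis;
    samples shifted in from outside the trace are treated as zero.
    """
    signals = np.asarray(signals, dtype=float)
    t = np.arange(signals.shape[1]) * dt
    channel = np.zeros_like(t)
    for s, w, tau in zip(signals, weights, shifts_in_s):
        channel += w * np.interp(t - tau, t, s, left=0.0, right=0.0)
    return channel
```

A positive shift delays a trace, so delaying one receiver's pulse until it coincides with another's makes both contribute at the same time in the channel.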

SalvusFlow

Improve error messages in case retried operations fail.

SalvusFlow

Better surface and volume output handling by the EventData object for advanced Salvus usage patterns:

- New EventData.get_wavefield_output() method parsing outputs to WavefieldOutput objects.
- New EventData.get_associated_salvus_job() method returning a potentially still existing Salvus job for the EventData object.
- New EventData.get_remote_extra_output_filenames() method to get paths of remote output files.
- New EventData.download_extra_outputs() method to download remote wavefield output data.
- New EventData.delete_associated_job_and_data() method to delete potentially still existing remote wavefield data.

SalvusFlow

Fix a bug in computing the suggested start and end times of analytic source wavelets (e.g., Ricker, GaussianRate) with time shifts.

Previously, when specifying custom time shifts for analytic wavelets, the time shifts were not reflected in the auxiliary functions used to plot the wavelet. This did not affect the actual start and end times used in the simulation.

SalvusMesh

This change brings in three utilities for working with layered models. In particular, they are:

- split_layered_model: This function allows a layered model to be split into two based on conditions targeting the model's interfaces or materials.
- flood: This function allows a layer's material parameters to be "flooded", or extruded, in the vertical direction.
- blend: This function supports the blending of two materials and currently supports the averaging of constant parameters, as well as the linear or cosine-taper-based blending of discrete models along their vertical coordinate.
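To make the cosine-taper option of blend concrete, here is one plausible form of such a taper in plain NumPy. It is a sketch under stated assumptions: cosine_taper_blend and its arguments are hypothetical, and the actual taper used by salvus.mesh.layered_meshing.utils may be defined differently.

```python
import numpy as np


def cosine_taper_blend(z, values_a, values_b, z_start, z_end):
    """Blend two discrete profiles along z with a cosine taper.

    Below z_start the result equals values_a, above z_end it equals values_b,
    and in between the weight of values_b rises smoothly from 0 to 1.
    """
    z = np.asarray(z, dtype=float)
    s = np.clip((z - z_start) / (z_end - z_start), 0.0, 1.0)
    weight_b = 0.5 * (1.0 - np.cos(np.pi * s))  # use weight_b = s for a linear blend
    return (1.0 - weight_b) * np.asarray(values_a) + weight_b * np.asarray(values_b)
```

Compared with a linear blend, the cosine taper has zero slope at both ends of the transition zone, which avoids kinks in the blended profile.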

Additional details and documentation can be found along with the functions themselves in the salvus.mesh.layered_meshing.utils module.

SalvusMesh

Add support for numpy>=1.26.0 and xarray>=2023.10.

SalvusProject

Bugfixes and utility functions that simplify adding new events to ongoing inversions. These will soon be demonstrated in this tutorial.

SalvusProject

SalvusProject now tracks the extra_output_configuration argument passed to p.simulations.launch(). It will detect if the simulations have previously been run with different output settings and will optionally overwrite existing results.

SalvusProject

Add a check in the window picking algorithm to ensure the time axis of the observed data is consistent with the synthetics. Traces for which the observed data do not cover the entire simulated time interval will be ignored during window picking, and the stations will be written to a log file.

Such situations previously raised a ValueError, which was hard to recover from without manually removing the delinquent stations from the observed data.

SalvusProject

Fix a bug in get_misfit_comparison_table(), which would trigger an exception when passing the same data for comparison multiple times.

Salvus version 0.12.15

Released: 2023-11-26

This is a minor release with several quality of life improvements and minor bug fixes as listed below. New features include the ability to customize shotgather plots and the static output of the wavefield at the final time.

This is the first release that supports Python 3.11.

SalvusCompute

Add the ability to output the final state of a simulation. Using the new output key final_time_data, it is possible to output the primary fields (displacement, velocity, phi, phi_t) at the final time on an unstructured mesh. The output file is thus independent of the partition and number of ranks used in the simulation.

Example as part of the extra_output_configuration in p.simulations.launch:

```python
extra_output_configuration={
    "final_time_data": {"fields": ["displacement"]},
},
```

or directly in the simple_config simulation object:

```python
w.output.final_time_data.format = "hdf5-minimal"
w.output.final_time_data.fields = ["displacement"]
w.output.final_time_data.filename = "final_time.h5"
```

SalvusFlow

Fix an issue with how cancelled job arrays are represented in the internal job database.

SalvusFlow

Enable site configurations with a high number of default ranks. This was previously limited to 100. While we still recommend using a small number here, it is now possible to go up to 1024 ranks.

SalvusMesh

Fix a problem with the notebook mesh widget. It now properly displays meshes with few and thin elements in 2-D and 3-D.

SalvusProject

Added a verbosity parameter to all methods creating a Salvus project.

SalvusProject

API CHANGE

Added custom gather plots to SalvusProject, which allow one to sort, filter, or apply self-defined operations to receivers, and to collectively plot multiple events and datasets. Note that this also changes the signature of the existing shotgather: it no longer accepts width, height, and DPI, but instead accepts a dataclass with those fields, or an existing axes object, passed as plot_using.

SalvusProject

Support for xyzservices >= 2023.10.1, as well as a unified approach to web basemaps across Salvus.

SalvusProject

Add a new compute_max_distance_in_m() function that computes the maximum distance between any two points in a given set in Cartesian or spherical coordinates.

Additionally, a new .estimate_max_travel_distance_in_m() method utilizing the new function is available for all domain objects. This is useful, for example, to judge the approximate time waves will take to travel through a given domain.

Salvus version 0.12.14

Released: 2023-09-11

This is a minor release with small improvements listed below. Additionally, the documentation of the Python API has been improved.

SalvusMesh

Add the ability to interpolate custom fields when performing mesh-to-mesh interpolation:

```python
from salvus.mesh.tools.transforms import interpolate_mesh_to_mesh

int_mesh = interpolate_mesh_to_mesh(
    ...,
    fields_to_interpolate=["custom"],
)
```

SalvusProject

Add the option to plot the coordinates of an EventBlockData object in a custom axes frame.

SalvusProject

Ensure compatibility with pandas>=2.1.0.

SalvusProject

Fix a bug in parsing events from ASDF files, which caused a ValueError in some cases when adding events through p.actions.seismology.add_asdf_file().

Salvus version 0.12.13

Released: 2023-07-04

This is a minor release with a few bug fixes listed below.

SalvusMesh

When building meshes with exterior domains for modeling the gravitational potential, all material parameters will automatically be set to zero. Furthermore, the exterior is assigned a unique layer id, which increments the layer id at the surface by one.

SalvusProject

Fix a bug in the visualization widget of an inverse problem, which could cause a ZeroDivisionError if there were one or more events without windows in the data selection.

SalvusProject

Fix a bug in the window picking algorithm which caused a TypeError for constant traces.

Salvus version 0.12.12

Released: 2023-05-31

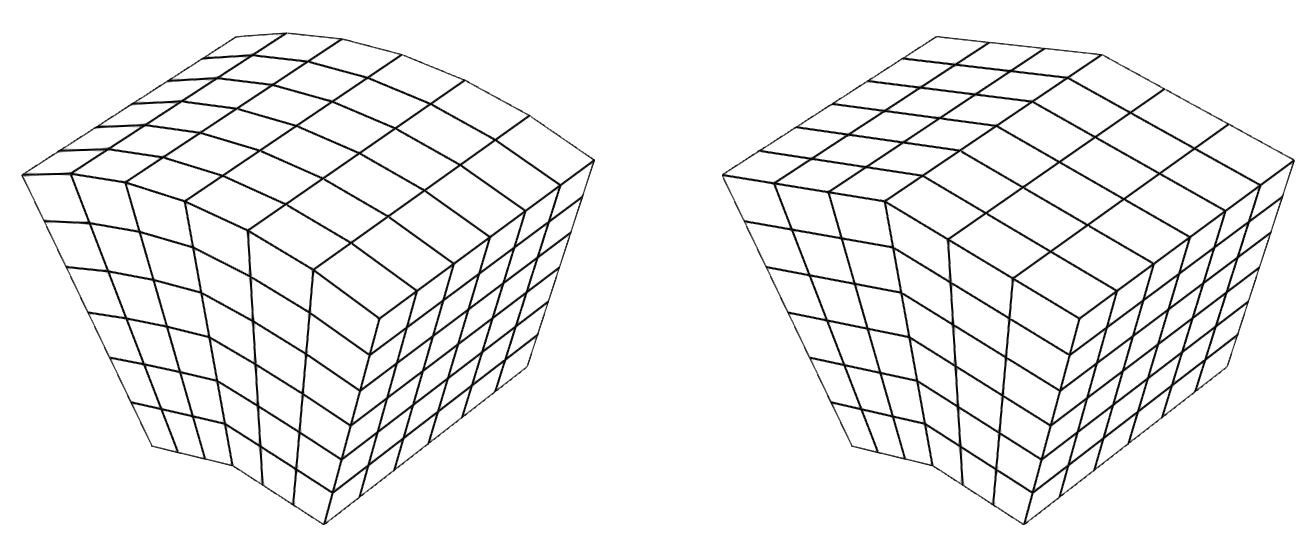

This release brings in a new backend for the Cartesian meshing algorithms. These changes do not affect the API. In some scenarios, the resulting mesh can change slightly because of the improved meshing algorithms. If you notice undesired changes, please reach out in the user forum or message [email protected]

This is also the first Salvus release that supports Python 3.9. You can choose the Python version from the interactive menu in the downloader.

More information can be found here

Note that Python 3.7 will reach its end-of-life at the end of June 2023.

SalvusFlow

The salvus-cli upgrade command now works in cases where the upgrade changes the initialization logic of Salvus.

SalvusMesh

API CHANGE

BM files parameterized using depth now explicitly need a model value specified at depth = 0. This is to avoid ambiguities that could otherwise occur in the vertical span of the mesh.

SalvusMesh

The backend Cartesian meshing algorithms have been improved, and this may result in changes to some meshes. If you notice significant undesired changes, or encounter any other problems, please contact us.

SalvusMesh

API CHANGE

The salvus.mesh.chunked_interface.create_mesh_chunkwise() function is now deprecated.

Salvus version 0.12.11

Released: 2023-05-09

Minor release with a few bug fixes and other small improvements listed below.

Highlights of new features include the ability to output volumes and surfaces on a subinterval of the simulation time axis, improved functionality to extrude meshes, and an experimental feature to invert for the source wavelet.

SalvusCompute

Adds the ability to output surface and volume fields only between certain times. To access this functionality, start_time_in_seconds and end_time_in_seconds can now be passed to the respective output groups in a simulation.Waveform(...) object. Times passed are always truncated (floored) to the preceding time step.

SalvusCompute

Fix a bug in computing gradients with respect to the C_ij components of the elastic tensor in fully anisotropic 3-D media.

SalvusFlow

Fix a regression where sources and receivers could no longer be specified as dictionaries.

SalvusFlow

Enforce that each type of boundary condition can only be added once to a simulation object to ensure that the boundary conditions are treated properly in SalvusCompute.

```python
w = sn.simple_config.simulation.Waveform(mesh=m)

# If all settings for the same type of boundary condition are the same,
# the side sets will be merged.
w.add_boundary_conditions(
    [
        sn.simple_config.boundary.HomogeneousDirichlet(side_sets=["x0"]),
        sn.simple_config.boundary.HomogeneousDirichlet(side_sets=["x1"]),
    ]
)

# However, using different settings for a boundary type will raise an error.
w.add_boundary_conditions(
    [
        sn.simple_config.boundary.Absorbing(
            side_sets=["x0"], taper_amplitude=0.0, width_in_meters=0.0
        ),
        sn.simple_config.boundary.Absorbing(
            side_sets=["x1"], taper_amplitude=1.0, width_in_meters=1.0
        ),
    ]
)
```

This is now part of the validation of the simple config objects.
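The rule described above (merge side sets when the settings agree, reject conflicting settings) can be modeled in a few lines of plain Python. BoundaryCondition and merge_boundary_conditions below are hypothetical stand-ins for illustration and do not reflect the internals of simple_config.

```python
from dataclasses import dataclass, field


@dataclass
class BoundaryCondition:
    """Hypothetical stand-in: a boundary type, its settings and its side sets."""

    kind: str
    side_sets: list
    settings: dict = field(default_factory=dict)


def merge_boundary_conditions(bcs):
    """Merge side sets of same-type conditions; reject conflicting settings."""
    merged = {}
    for bc in bcs:
        if bc.kind not in merged:
            merged[bc.kind] = BoundaryCondition(
                bc.kind, list(bc.side_sets), dict(bc.settings)
            )
        elif merged[bc.kind].settings != bc.settings:
            raise ValueError(
                f"Boundary condition '{bc.kind}' added twice with different settings."
            )
        else:
            merged[bc.kind].side_sets.extend(bc.side_sets)
    return list(merged.values())
```

Two HomogeneousDirichlet entries on "x0" and "x1" collapse into one condition on both side sets, while two Absorbing entries with different tapers raise a ValueError.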

SalvusFlow

Fix a bug that could cause a failing license request when using tokens in adjoint simulations.

SalvusMesh

The mesh notebook widget will now initialize its camera to point at the center of spherical chunks.

SalvusMesh

Higher-order meshes can now be extruded from 2-D to 3-D.

SalvusMesh

Material parameters will now also be extruded when meshes are being extruded.

SalvusProject

Add support for ipywidgets 8.

SalvusProject

API CHANGE

The window picking algorithm for seismology now correctly handles cases where the event origin time is not the time of the first sample of the synthetics. A consequence of this is that custom window picking functions must now also have an event_origin_time keyword argument. We believe this should not affect any users; if it does, the error message is very clear and fixing it is straightforward.

SalvusUtils

Add a new algorithm that groups elements by their number of occurrences before performing the wavefield output tensor contraction. This should be close to the most efficient approach in all cases, so it is now also the default when extracting a WavefieldOutput object to an xarray.

Changes to Experimental Features in 0.12.11

SalvusModules

Adds the ability to invert for source characteristics from single-component (acoustic) data. There are currently two interfaces for performing such a source inversion in the salvus.modules.source_inversion submodule, using one of the following functions:

- invert_wavelet: Accepts the observed and synthetically generated data as NumPy arrays and returns the inverted wavelet as a NumPy array.
- invert_wavelet_from_event_data: Performs the same inversion as invert_wavelet, but accepts EventData objects instead of NumPy arrays. This is generally the more convenient approach when used together with SalvusProject.

Note that inverting for multi-component data is currently unsupported.
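The algorithm behind invert_wavelet is not described here. For orientation, a common textbook approach is a damped least-squares spectral division: if the synthetics were computed with a known reference wavelet, the ratio between the true and reference wavelet spectra can be estimated jointly over all traces. The sketch below assumes exactly that setup (circular convolution, known reference wavelet); invert_wavelet_sketch is a hypothetical name and not the Salvus implementation.

```python
import numpy as np


def invert_wavelet_sketch(observed, synthetic, reference_wavelet, damping=1e-10):
    """Least-squares wavelet estimate from single-component traces.

    Assumes synthetic[i] = G_i * reference_wavelet and observed[i] = G_i * w_true
    (circular convolution). Per frequency, W_true / W_ref is estimated as
    sum_i conj(S_i) D_i / (sum_i |S_i|^2 + eps) and applied to the reference.
    """
    D = np.fft.rfft(observed, axis=-1)
    S = np.fft.rfft(synthetic, axis=-1)
    W_ref = np.fft.rfft(reference_wavelet)
    numerator = np.sum(np.conj(S) * D, axis=0)
    denominator = np.sum(np.abs(S) ** 2, axis=0)
    eps = damping * denominator.max()  # water level against near-empty frequencies
    ratio = numerator / (denominator + eps)
    return np.fft.irfft(ratio * W_ref, n=len(reference_wavelet))
```

Stacking over traces in the numerator and denominator makes the estimate robust to spectral notches in individual traces, since a frequency only becomes unstable if it is weak in all of them.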

Salvus version 0.12.10

Released: 2023-03-15

First release of 2023 with several small improvements in various components of Salvus. Details are listed below.

SalvusCompute

Add support for ASDF output for receivers that only differ by location code.

SalvusFlow

There is a new custom_commands setting for Slurm sites to add custom bash commands to a job script.

SalvusMesh

Add a function to compute the density model based on Gardner's relation, with support for customizable Gardner constants alpha and beta.
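Gardner's relation is rho = alpha * vp**beta. The sketch below uses the classic constants alpha = 0.31 and beta = 0.25, valid for vp in m/s and rho in g/cm^3; these defaults, and the function name gardner_density, are assumptions for illustration and not necessarily what the Salvus function uses.

```python
import numpy as np


def gardner_density(vp, alpha=0.31, beta=0.25):
    """Gardner's relation rho = alpha * vp**beta.

    The defaults are the classic constants for vp in m/s and rho in g/cm^3;
    they are assumptions here, not necessarily Salvus's defaults.
    """
    return alpha * np.asarray(vp, dtype=float) ** beta
```

For vp = 10000 m/s this gives 0.31 * 10 = 3.1 g/cm^3; multiply by 1000 to obtain kg/m^3.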

SalvusMesh

Adds a new function mesh.adjust_side_sets.adjust_site_set that allows for the re-interpolation of a mesh's vertical side set to a new digital elevation model, potentially at a higher order than originally used. This can be useful, for instance, when changing frequency bands and taking advantage of the smaller mesh size to better resolve topography, when changing a mesh's model order, or when re-interpolating after adding local refinements. This re-interpolation now also happens by default when using the layered meshing interface.

SalvusMesh

Both 2-D and 3-D anisotropic acoustic models can now be specified in terms of velocities (rho, vph, and vpv). Note that this parameterization implies epsilon = delta.
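One way to see the implication: Thomsen's epsilon is defined through the ratio of horizontal to vertical P velocity, vph = vpv * sqrt(1 + 2 * epsilon), while vph and vpv carry no independent information about delta, so the model is elliptical (delta = epsilon). A small sketch of that relation follows; thomsen_from_velocities is a hypothetical helper, not part of Salvus.

```python
import numpy as np


def thomsen_from_velocities(vpv, vph):
    """Thomsen epsilon (and delta) implied by vertical/horizontal P velocities.

    Uses vph = vpv * sqrt(1 + 2 * epsilon). With only vpv and vph given, the
    model is elliptical, i.e. delta = epsilon.
    """
    epsilon = 0.5 * ((np.asarray(vph, dtype=float) / vpv) ** 2 - 1.0)
    return epsilon, epsilon  # (epsilon, delta)
```

For example, vpv = 1000 m/s and vph = 1100 m/s give epsilon = delta = 0.105, and equal velocities give an isotropic model with epsilon = delta = 0.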

SalvusModules

Throw a descriptive error message when trying to use the waveform database with unsupported receiver types.

SalvusProject

Enable passing start and end times for computing the discrete Fourier transform as extra output configuration in SalvusProject.

Example:

```python
p.simulations.launch(
    simulation_configuration="my_simulation",
    events=p.events.list(),
    ranks_per_job=2,
    site_name="local",
    extra_output_configuration={
        "frequency_domain": {
            "frequencies": [10.0],
            "fields": ["phi"],
            "start_time_in_seconds": 1.0,
            "end_time_in_seconds": 1.1,
        }
    },
)
```
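Frequency-domain output from a time-domain solver is commonly computed with a running DFT that is updated once per time step; restricting it to a time window simply means skipping the update outside the window. The sketch below shows that idea with rectangular-rule integration. The function name is hypothetical, and whether Salvus uses this exact normalization is an assumption.

```python
import numpy as np


def windowed_running_dft(times, values, frequency, start_time, end_time):
    """Accumulate sum_k u(t_k) * exp(-2j*pi*f*t_k) * dt over start <= t_k < end.

    Mimics an on-the-fly DFT that only receives contributions while the
    current simulation time lies inside the requested window.
    """
    dt = times[1] - times[0]
    accumulator = 0.0 + 0.0j
    for t, u in zip(times, values):
        if start_time <= t < end_time:
            accumulator += u * np.exp(-2j * np.pi * frequency * t) * dt
    return accumulator


dt = 0.001
times = np.arange(2000) * dt
values = np.cos(2 * np.pi * 10.0 * times)  # a 10 Hz signal
# The window contains exactly one 10 Hz period (samples 1.000 s to 1.099 s).
X = windowed_running_dft(times, values, 10.0, start_time=0.9995, end_time=1.0995)
```

For a 10 Hz cosine over one full period, the accumulated value is half the window length, 0.05, with a vanishing imaginary part.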

SalvusProject

Fix a bug that would prevent launching simulations of events without receivers in SalvusProject. This can be useful, for instance, if only volumetric output is desired.

SalvusProject

Stability improvements in the receiver weighting algorithms in case a particular event has no or very few windows.

SalvusUtils

Add a new

salvus.utils.logging.log_timing() context manager that logs the

time the context takes to execute.SalvusUtils

Adds the ability to properly handle surface output in the

salvus.toolbox.helpers.wavefield_output module. To use this, one simply needs

to pass "surface" as an output type when loading the wavefield output. Also

added the ability to drop dimensions from outputs, so 3-D surface outputs can be

visualized in 2-D planar plots (such as in matplotlib). This can be accessed by

calling the .drop(...) function on a WavefieldOutput instance.

Salvus version 0.12.9

Released: 2022-12-22

This is a minor release that fixes a few bugs as well as adds some new

features. Most notable of these new features are a) an improvement to

SalvusCompute's time-step computation algorithm which now takes into account

the influence of any absorbing boundary layers, which may improve the stability

of some simulations, and b) a new set of functionality to interpolate

time-dependent volumetric wavefield data onto a set of arbitrary points,

including regular grids, for the purpose of simplifying the analysis of such

data.

SalvusCompute

Add a new heuristic for computing the time step inside the sponge layers of the

absorbing boundaries. The previous logic required a more conservative choice

of the Courant number to guarantee stability of the simulation.
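
The Courant number C enters the classic stability estimate dt <= C * min_e(h_e / v_e). Here h_e is an element size and v_e is the fastest wave speed in that element. The sketch below only illustrates this textbook estimate. It is not the new sponge-layer heuristic in SalvusCompute, which this entry does not describe. The function name and its inputs are assumptions.

```python
import numpy as np


def cfl_time_step(element_sizes, max_velocities, courant_number=0.5):
    """Largest stable time step according to the CFL estimate dt = C * min(h / v)."""
    h = np.asarray(element_sizes, dtype=float)
    v = np.asarray(max_velocities, dtype=float)
    return courant_number * np.min(h / v)


# Two elements: a coarse, fast one and a finer, slower one.
dt = cfl_time_step([10.0, 5.0], [2000.0, 1500.0], courant_number=0.5)
```

The minimum is taken over all elements. As a result, a single small or fast element, for instance inside an absorbing layer, dictates the global time step.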

SalvusMesh

Add a new free function

name_free_side_set to salvus_mesh_utils. This

function finds all mesh surfaces not currently assigned to a side set and either

a) creates a new side set with the name passed, assigning the found surfaces, or

b) appends to an existing side set of the same name if it already exists in the

mesh.

SalvusModules

Add safeguards to properly handle two edge cases in the point-to-linesource

conversion utility.

SalvusUtils

Adds new functionality in

salvus.toolbox.helpers.wavefield_output to help

encapsulate and manipulate raw volumetric wavefield output from SalvusCompute.

Additional functions allow the extraction of time-dependent wavefield data

from these files onto regular grids in space and time.

Salvus version 0.12.8

Released: 2022-11-21

This is a minor release featuring a few bug fixes and additions detailed below

to improve usability and performance.

Additionally, several improvements to the internal meshing algorithms have

been made.

SalvusCompute

Bug fix for reading in custom source time functions from HDF5 in SalvusCompute.

This could cause an interpolation error when providing input on an oversampled

time axis.
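
A custom source time function given on a finer (oversampled) time axis must be brought onto the simulation's time grid. The sketch below does this with linear interpolation via `numpy.interp` and sets the values to zero outside the input range. The interpolation scheme is an assumption, because SalvusCompute's internal method is not described here. The helper name is hypothetical.

```python
import numpy as np


def resample_stf(t_in, stf_in, dt_sim, nt_sim, t0_sim=0.0):
    """Linearly interpolate a source time function onto the simulation time axis.

    Samples outside the input time range are set to zero.
    """
    t_sim = t0_sim + dt_sim * np.arange(nt_sim)
    return np.interp(t_sim, t_in, stf_in, left=0.0, right=0.0)


# Input provided on a 10x oversampled axis relative to the simulation.
t_in = np.linspace(0.0, 1.0, 1001)
stf_in = t_in.copy()  # a simple ramp
stf_sim = resample_stf(t_in, stf_in, dt_sim=0.01, nt_sim=120)
```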

SalvusCompute

Enable new field

frequency-domain for (time-dependent) volume and surface

data. In combination with the static frequency-domain output, this enables

storing the time-evolution of the discrete Fourier transform with the

polynomial degree of the SEM shape functions.

SalvusFlow

There is a new

job_script_shebang setting for Slurm sites to allow

overriding the job script shebang.

SalvusMesh

Performance improvement in

apply_element_mask, which can lead to significant

speed-ups for meshes that have neither nodal parameters nor layered topography.

SalvusOpt

Enable optional additional outputs when computing the optimal transport misfit.

If requested, the distance matrix and the optimal assignment will be returned,

which is useful for visualizing the optimal transport map.
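
Consider two equally sized sets of 1-D points with uniform weights and a convex cost |x - y|**p with p >= 1. For that case, matching the points in sorted order gives the optimal assignment. The sketch below returns the same kinds of extra outputs mentioned above, a distance matrix and an assignment. It only illustrates the idea and is not the Salvus optimal transport misfit. The function name is hypothetical.

```python
import numpy as np


def optimal_transport_1d(x, y, p=2):
    """Optimal transport between equal-size 1-D point sets with cost |x - y|**p.

    Returns (total_cost, distance_matrix, assignment), where x[i] is moved
    to y[assignment[i]].
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    distance_matrix = np.abs(x[:, None] - y[None, :]) ** p
    # In 1-D with a convex cost (p >= 1), matching sorted points is optimal.
    ix = np.argsort(x)
    iy = np.argsort(y)
    assignment = np.empty_like(ix)
    assignment[ix] = iy
    total_cost = distance_matrix[np.arange(x.size), assignment].sum()
    return total_cost, distance_matrix, assignment


cost, dist, assignment = optimal_transport_1d([0.0, 3.0, 1.0], [2.5, 0.2, 1.1])
```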

SalvusProject

Enable chunkwise job submission in task chains.

The optional parameter

max_concurrent_chains can be passed to the

TaskChainSiteConfig to limit the maximum number of concurrent chains.

Note that when using more than one site, this parameter has to be consistent

for all sites in the current implementation.

SalvusProject

Add ability to cancel ongoing or pending simulations in a project.

Example:

p.simulations.cancel(
    simulation_configuration="my_simulation", events=p.events.list()
)

Alternatively,

p.simulations.cancel_all() will cancel all simulations from

the simulations store.

Salvus version 0.12.7

Released: 2022-10-12

This is a minor bugfix / maintenance release that makes a few of the internal

meshing algorithms more robust.

Salvus version 0.12.6

Released: 2022-09-29

New features introduced with this release include callback functions

to implement event-dependent meshes and iteration-dependent event

selections for mini-batch inversions. Furthermore, an initial implementation

of a new workflow for computing forward simulations, misfits and gradients

within a single chain of tasks is introduced.

Additionally, the release contains various minor bug fixes and small

improvements listed below.

SalvusCompute

Allow for an axis-aligned shortcut for absorbing boundary attachment. Useful in

Cartesian domains, and specified via passing "side_sets_are_axis_aligned" to

the simple config

AbsorbingBoundary constructor.

SalvusFlow

Use

shutil.copyfile() instead of shutil.copy() and shutil.copy2() to

avoid problems copying between file systems with and without permission bits.

SalvusFlow

The data selection can now properly deselect data whose processing raises an

exception for only a few receivers. This usually only happens with faulty

receiver metadata.

SalvusOpt

Add new option

use_event_dependent_gradients for the trust-region method.

When set to False, only the accumulated gradient for all events is used, and

tasks of type TaskMisfitsAndSummedGradient are issued instead of

TaskMisfitsAndGradients.

SalvusOpt

Add a new option for

discontinuous_model_blocks to the mapping function.

When used in combination with a homogeneous scaling, this function allows

for the parameterization of piecewise constant models.

SalvusProject

Upgrade to

ipywidgets>=8.0.0. The changes are backward compatible with older

versions of ipywidgets.

SalvusProject

Add functionality to check the trial model on subsets of events (including

none). The list can be adjusted by using the optional argument

control_group_events in the constructor of an iteration.

SalvusProject

New optional parameter

max_events_per_job_submission for inversion action

component to limit the maximum number of events that are simultaneously

submitted per job to reduce the memory overhead.

SalvusProject

Add an optional callback function

event_batch_selection to the

InverseProblemConfiguration, which allows defining an iteration-dependent

selection of events and/or control group to check the trial model only for a

subset of events.

SalvusProject

Implement gradient mapping for event-dependent meshes to enable their use

for inversions.

SalvusProject

Mitigate open NFS file handles when deleting simulation results.

SalvusProject

Support event-dependent meshes in task chains.

This also adds a faster way to compute misfits without gradients.

Changes to Experimental Features in 0.12.6

SalvusProject

Initial implementation of task chains for solving inverse problems.

The

job_submission settings can now optionally receive a TaskChainSiteConfig

to compute misfits in parallel, and to chain forward and adjoint simulations.

This is an experimental feature which is currently restricted to remote sites

which share the same file system and the DataBlockIO backend of events.

Salvus version 0.12.5

Released: 2022-08-25

Minor release containing several performance improvements in handling FWI

workflows and a few bug fixes. Details are listed below.

From this version onward, Salvus drops support for CentOS 6 and older systems.

SalvusCompute

From this release onward, the oldest supported CentOS version is CentOS 7.

Salvus will no longer run on Linux systems with an older libc.

CentOS 6 reached End of Life (EOL) in November 2020. If you still need to

run Salvus on an older operating system, please get in touch with

[email protected]

SalvusCompute

Fix a bug in the material parameterization of 3D anisotropic elastic material.

When using the full tensor (Cij) it is no longer necessary to pass a symmetry

axis with the mesh.

SalvusFlow

New event block data structure to more efficiently deal with large-scale

acquisition geometries and a corresponding way to deal with them in

SalvusProject.

SalvusFlow

New task chain controller and runner primitives to efficiently steer and

parallelize a large collection of task chains across different machines.

SalvusMesh

Two small bug fixes in the mesh widget. The colorbar is now properly scaled

for nearly constant parameter fields. Furthermore, it is possible to switch

parameter fields while the side-sets toggle is active, which previously raised

an error.

SalvusProject

Add new internal functionality to efficiently operate on structured receiver data.

This includes resampling, tapering, and padding.

SalvusProject

Generalized external data proxies to read data from arbitrary external data

sources without copying them into a Project.

SalvusProject

Assorted file format utilities, helpers, and converters.

SalvusProject

Automatically validate that all side-sets required for surface output or

boundary conditions are contained in the mesh.

SalvusProject

Implement a masking function as an optional callback of the simulation

configuration. This enables using event-dependent meshes for forward

simulations. This feature is currently not supported for assembling gradients.

SalvusProject

Fix a bug which could cause simulation results to end up in the wrong

directory when the event names were not padded with zeros.

SalvusProject

Performance improvements for misfit computation workflows in projects with

a large number of events.

Salvus version 0.12.4

Released: 2022-07-28

Minor release that brings in support for using strain and gradient measurements

in full-waveform inversion. Furthermore, the ability to read (adjoint) sources

in chunks was added to alleviate the memory requirements for simulations with

many sources or time steps.

There are a few more small improvements and bug fixes listed below.

SalvusCompute

Support the partial loading of sources into memory. Can be important for

simulations with lots of sources and / or a long duration.

SalvusFlow

New

omit_tasks_per_node setting for Slurm sites. This will cause the

ntasks-per-node option to be omitted from both the #SBATCH

header and the call to srun, for the rare sites where this is

necessary.

SalvusProject

Fix a bug in the bandpass processing fragment, which did not recognize

frequency inputs in scientific number format.

Here is an example of a data name:

"EXTERNAL_DATA:raw_data | bandpass(1.0e3, 2.0e3) | normalize"
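
The fix concerns turning the numbers inside the bandpass(...) fragment into frequencies. The hypothetical parser below is not SalvusProject's code. It shows how passing the captured text to Python's `float()` handles both plain decimals and scientific notation. By contrast, a digits-and-dots pattern such as `[0-9.]+` would stop at the "e".

```python
import re

# Matches a fragment such as "bandpass(1.0e3, 2.0e3)" and captures both numbers.
_BANDPASS = re.compile(r"bandpass\(\s*([^,\s]+)\s*,\s*([^)\s]+)\s*\)")


def parse_bandpass(data_name):
    """Return (min_frequency, max_frequency) from a processing chain, or None."""
    for fragment in data_name.split("|"):
        match = _BANDPASS.fullmatch(fragment.strip())
        if match:
            # float() accepts plain decimals as well as scientific notation.
            return float(match.group(1)), float(match.group(2))
    return None


corners = parse_bandpass("EXTERNAL_DATA:raw_data | bandpass(1.0e3, 2.0e3) | normalize")
```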

SalvusProject

Enable the use of misfits and adjoint sources based on first spatial

derivatives of the wavefield, i.e., strains and gradients.

It is now possible to also pass

strain, gradient-of-displacement and

gradient-of-phi as valid receiver fields in the misfit configuration and

related event data and event misfit objects.

Remember that strain data are not rotated and output is in Cartesian

coordinates.

SalvusProject

API CHANGE

Rename datasets (and derived channel names) in ASDF output for gradients of the

displacement field to comply with the SEED convention.

This is a (small) breaking change that only affects the combination of ASDF

files with outputting the gradient of the displacement field.

The names of datasets and channels need to be adjusted as follows:

2D (old): XXX, XXY, XYX, XYY

2D (new): XG0, XG1, XG2, XG3

3D (old): XXX, XXY, XXZ, XYX, XYY, XYZ, XZX, XZY, XZZ

3D (new): XG0, XG1, XG2, XG3, XG4, XG5, XG6, XG7, XG8

Apologies for the inconvenience caused.
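
For scripts that still refer to the old names, the rename is a fixed positional mapping. The small helper below builds that mapping from the lists above. It is hypothetical and not part of Salvus.

```python
OLD_GRADIENT_CHANNELS = {
    2: ["XXX", "XXY", "XYX", "XYY"],
    3: ["XXX", "XXY", "XXZ", "XYX", "XYY", "XYZ", "XZX", "XZY", "XZZ"],
}


def gradient_channel_map(dim: int) -> dict:
    """Map old gradient-of-displacement channel names to the new XG<i> names."""
    return {old: f"XG{i}" for i, old in enumerate(OLD_GRADIENT_CHANNELS[dim])}


rename_3d = gradient_channel_map(3)
new_names = [rename_3d[c] for c in ["XXX", "XYZ", "XZZ"]]
```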

SalvusProject

Fix a bug in

p.viz.waveforms(), which caused the function to fail with a

cryptic error message when data was passed as a string.

Salvus version 0.12.3

Released: 2022-07-03

This release introduces new modules and improved support for several types of physics in

the simulation engine. This includes anisotropic acoustic media, native

support for visco-acoustic simulations, and a new solver module for static

problems to solve the Poisson equation. Some of the new features are currently

only supported on CPU hardware.

Additionally, the release enables custom MPI commands, for instance

to use machine files on remote machines and contains several small bug fixes.

SalvusCompute

Support waveform simulations and gradients w.r.t. medium properties in

VTI acoustic media.

SalvusCompute

Support visco-acoustic modeling in the scalar wave equation.

The implementation and API are very similar to visco-elastic modeling, and

require the specification of linear solids and

QKAPPA as a material

parameter.

Previously, attenuation in a fluid could only be modeled as a degenerate case

of elastic physics.

This feature is currently only supported on CPUs and SalvusCompute will

throw an error when attempting to run a visco-acoustic simulation on a

CUDA-enabled site.

SalvusFlow

API CHANGE

Extend functionality of handling segy files for adding external data to a

project.

Instead of just passing the source mechanism, users can now define simple

callback functions for sources and receivers to create Salvus objects from

the information stored in segy files. This breaks the previous syntax of

the implementation of

SegyEvent, but it should be straightforward to

upgrade.

SalvusFlow

Minor improvement in the SSH connection error handling. It should now report

a better error message.

SalvusFlow

Extra keyword arguments can now be passed to

paramiko.SSHClient.connect()

to allow more fine-tuning of some SSH connections.

Usage in the site config file:

[sites.my_site.ssh_settings]
hostname = "some_host"
username = "some_user"

[sites.my_site.ssh_settings.extra_paramiko_connect_arguments.disabled_algorithms]
pubkeys = ["rsa-sha2-512", "rsa-sha2-256"]

Furthermore the

init-site command now has a --verbose flag to facilitate

debugging tricky connections:

salvus-cli init-site my_site --verbose

SalvusFlow

A new

mpirun_template parameter for ssh and local site types that allows

full customization of the actual call to mpirun in case it is necessary.

Usage in the site config file:

[sites.my_site.site_specific]
mpirun_template = "/custom/mpirun -machinefile ~/mf -n {RANKS}"

This example will thus use a custom

mpirun executable with a non-standard

argument for all Salvus runs on that site. The {RANKS} argument will be

filled in by Salvus with the number of ranks for each simulation.

SalvusProject

Changes to the event configuration in

UnstructuredMeshSimulationConfiguration

objects are now properly recognized when trying to overwrite an existing

configuration of the same name.

Changes to Experimental Features in 0.12.3

SalvusCompute

Add a new physics module to solve the Poisson equation.

This is the first elliptic PDE that can be solved with Salvus.

The Poisson equation is useful for simulating the gravitational potential

of a planetary object, as well as for computing correct inner products and

regularization terms in Sobolev spaces.

This feature is currently only supported on CPUs and is considered

experimental.
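
As an example of this kind of elliptic problem, the gravitational potential Phi satisfies the Poisson equation laplacian(Phi) = 4 * pi * G * rho. The sketch below solves the 1-D model problem -u'' = f with zero Dirichlet boundary values using finite differences in NumPy. It is only a conceptual stand-in, since SalvusCompute uses spectral elements. The function name is hypothetical.

```python
import numpy as np


def solve_poisson_1d(f, n):
    """Solve -u''(x) = f(x) on (0, 1) with u(0) = u(1) = 0.

    Uses second-order central finite differences on n interior nodes.
    """
    x = np.linspace(0.0, 1.0, n + 2)
    h = x[1] - x[0]
    # Tridiagonal discretization of -d^2/dx^2 on the interior nodes.
    A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    u = np.zeros_like(x)
    u[1:-1] = np.linalg.solve(A, f(x[1:-1]))
    return x, u


# Manufactured solution: u = sin(pi x) solves -u'' = pi^2 sin(pi x).
x, u = solve_poisson_1d(lambda x: np.pi**2 * np.sin(np.pi * x), n=99)
error = np.max(np.abs(u - np.sin(np.pi * x)))
```

Unlike the time-dependent wave equation, no time stepping is involved. A single linear system yields the whole solution.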

Salvus version 0.12.2

Released: 2022-05-31

Minor update with a new model class based on xarray

to invert for structured data in SalvusOpt. Furthermore,

we extended the options for passing callback functions to

SalvusProject.

SalvusOpt

Add new model class

StructuredModel to SalvusOpt to invert models

parameterized on a regular grid using xarray.Datasets.

SalvusProject

The function serialization can now also deal with functions passed in

closures.

Salvus version 0.12.1

Released: 2022-05-16

This release provides several small improvements

for making inversion workflows more resilient and more robust.

Furthermore, we added easier support for arbitrary boundary conditions

through the

WaveformSimulationConfiguration.

SalvusFlow

Add a normalization option for the misfit and adjoint source

computation.

The adjoint source is aware of this operation and Salvus will make sure it is

correct.
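
To see why the adjoint source must account for the normalization, consider one common choice: each trace is divided by its L2 norm before an L2 misfit is formed. This entry does not say which normalization Salvus applies, so that choice is an assumption. In the sketch below, the chain rule through s / |s| adds a projection term to the adjoint source. A finite-difference check confirms the result. The function name is hypothetical.

```python
import numpy as np


def normalized_l2_misfit(synthetic, observed, dt):
    """chi = 0.5 * dt * sum((s/|s| - d/|d|)**2) and its derivative d chi / d s."""
    s = np.asarray(synthetic, dtype=float)
    d = np.asarray(observed, dtype=float)
    norm_s = np.linalg.norm(s)
    s_hat = s / norm_s
    residual = s_hat - d / np.linalg.norm(d)
    misfit = 0.5 * dt * np.sum(residual**2)
    # Chain rule through s -> s / |s|, whose Jacobian is (I - s_hat s_hat^T) / |s|.
    adjoint_source = dt * (residual - s_hat * np.dot(s_hat, residual)) / norm_s
    return misfit, adjoint_source


# Check the analytic adjoint source against central finite differences.
rng = np.random.default_rng(42)
s = rng.standard_normal(50)
d = 3.0 * rng.standard_normal(50)
dt = 0.01
chi, adj = normalized_l2_misfit(s, d, dt)
eps = 1e-6
fd = np.array([
    (normalized_l2_misfit(s + eps * e, d, dt)[0]
     - normalized_l2_misfit(s - eps * e, d, dt)[0]) / (2 * eps)
    for e in np.eye(s.size)
])
```

Because the misfit is invariant to rescaling the synthetics, the adjoint source is orthogonal to s.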

SalvusOpt

Add basic timing statistics for the tasks of an iteration.

A summary is printed in the

Stats tab of the iteration widget.

SalvusOpt

Reduce the memory overhead of dealing with many event-dependent gradients.

SalvusProject

Add support for specifying boundary conditions in the

WaveformSimulationConfiguration. This is useful for Dirichlet-type

boundaries or absorbing boundaries of a

UnstructuredMeshSimulationConfiguration.

Boundaries specified here will be applied in addition to ocean load and/or

absorbing boundaries specified as AbsorbingBoundaryParameters in the

SimulationConfiguration. A ValueError is raised for duplicated conditions

on a side set.

SalvusProject

Add ability to recover from failed preconditioner tasks and provide error

logs of failed smoothing jobs.

SalvusProject

Add ability to recover from failed misfit computations. If a misfit computation

fails for one or more events during

compute_misfits, the simulation results are

considered corrupted and will be automatically deleted. This means that those

simulations will be resubmitted when calling compute_misfits again.

This helps, for instance, to recover from corrupted ASDF files in case they have

not been written or downloaded correctly.

Salvus version 0.12.0

Released: 2022-04-30

This is a new major release which comes with a big

portion of internal changes.

Fortunately, there are only a few breaking changes on the user-facing API,

which are mostly related to custom meshing functionality.

We've also used the opportunity to remove some deprecated functionality of the

inversion component, and added several improvements related to resilience and

performance.

SalvusFlow

Will now reinitialize the SSH site in case a socket has been closed.

SalvusFlow

Better sharing of existing SSH connections. Should reduce the number of

necessary SSH connection reinitializations.

SalvusFlow

Added timings to the individual tasks of a

TaskChain.

SalvusMesh

API CHANGE

Removed the

StructuredGrid2D, StructuredGrid3D and Skeleton classes and

replaced them with new MeshBlock and MeshBlockCollection classes.

This should have a minimal influence on most users - please contact us if

you experience any issues.

SalvusOpt

Performance improvement of the adjoint mapping function when using cutouts

around source or receiver locations.

SalvusProject

Remove job submission settings from the inversion routines

iterate() and resume(). Specifying site_name,

ranks_per_job and wall_time_in_seconds_per_job is no longer

supported. Instead, the job_submission_settings need to be either passed to

the constructor of the InverseProblemConfiguration or set using

p.inversions.set_job_submission_configuration().

SalvusProject

Add option to query simulations for specific compression settings.

Example:

p.simulations.query(
    simulation_configuration="my_simulation",
    misfit_configuration="my_misfit",
    wavefield_compression=sn.WavefieldCompression(
        forward_wavefield_sampling_interval=15
    ),
    events=p.events.list(),
)

SalvusProject

Add the option to pass an element mask to Cartesian volume models. Useful, for

instance, when you only want to interpolate parameters onto a specific

subdomain of the mesh (e.g., only onto elastic elements).

SalvusProject

Fix a bug that could occur in very specific circumstances when overwriting

an existing bathymetry model.

Salvus version 0.11.48

Released: 2022-03-30

This release contains a long-sought feature for reducing the memory footprint

of checkpoints for adjoint simulations by sampling the forward wavefield on

a coarser grid during the adjoint run. This includes breaking changes

for some of the inversion routines, which may require updating the syntax of

some notebooks. Details are listed below. If you are concerned about

upgrading, please get in touch with us in the user forum.

The release also contains bug fixes concerning the use of custom bm files

in SalvusProject and attaching receivers on SmoothieSEM meshes, as well as

the option to provide a custom scaling for the mapping function of the

inversion.

Also, don't forget to keep your python environment up-to-date!

We recommend always running

wget https://mondaic.com/environment.yml -O ~/environment.yml
conda env update -n salvus -f ~/environment.yml

before upgrading.

SalvusFlow

New

TaskChain workflow primitive that can be used to run multiple Salvus jobs

and/or Python scripts in a linear chain within a single local/ssh/HPC job.

This is a first implementation, so expect some rough edges:

import pathlib
import typing

import salvus.namespace as sn

# Construct a forward simulation object.
w_forward = sn.simple_config.simulation.Waveform(
    ..., store_adjoint_checkpoints=True
)
# Construct an adjoint simulation object.
w_adjoint = sn.simple_config.simulation.Waveform(...)
...
# There is a new `PROMISE_FILE:` prefix to tell SalvusFlow that a file does
# not exist yet but it will exist when the simulation is run.
w_adjoint.adjoint.point_source_block = {
    "filename": "PROMISE_FILE:task_2_of_2/input/adjoint_source.h5",
    "groups": ["adjoint_sources"],
}


# Define a Python function that is run between forward and adjoint simulations
# to generate the adjoint sources.
def compute_adjoint_source(
    task_index: int, task_index_folder_map: typing.Dict[int, pathlib.Path]
):
    folder_forward = task_index_folder_map[task_index - 1]
    folder_adjoint = task_index_folder_map[task_index + 1]
    output_folder = folder_forward / "output"
    event = sn.EventData.from_output_folder(output_folder=output_folder)
    event_misfit = sn.EventMisfit(
        synthetic_event=event,
        misfit_function="L2_energy_no_observed_data",
        receiver_field="displacement",
    )
    input_folder_adjoint = folder_adjoint / "input"
    event_misfit.write(input_folder_adjoint / "adjoint_source.h5")


# Launch the task chain. It serializes the Python function and launches
# everything either locally or on Slurm/other supported systems.
tc = sn.api.run_task_chain_async(
    site_name="local",
    tasks=[w_forward, compute_adjoint_source, w_adjoint],
    ranks=4,
)
# Wait until it finishes.
tc.wait()

SalvusFlow

More stable implementation of the rewritten receiver placement and it should

now also work for highly distorted meshes.

SalvusOpt

Add option for custom scaling in mapping function.

The custom scaling parameters need to be defined on the same mesh as the

simulation and provided as elemental fields for all parameters.

Example

mesh = p.simulations.get_mesh("sim")
# Modify scaling parameters for all fields
mesh.elemental_fields["VP"] = ...
mesh.elemental_fields["RHO"] = ...
m = Mapping(
    scaling=mesh,
    inversion_parameters=["M"],
    map_to_physical_parameters={"VP": "M", "RHO": "M"},
)

SalvusProject

API CHANGE

Add proper serialization of bm files in SalvusProject.

Previously, custom bm files were not added to the project and relied on

external paths which prevented copying projects to other machines.

Adding custom background models as string will now throw a deprecation warning.

It is strongly recommended to add custom bm files using the class

model.background.one_dimensional.FromBm.

SalvusProject

API CHANGE

Add option for wavefield compression during adjoint runs to reduce the

memory overhead. In order to allocate the correct number of checkpoints,

this setting needs to be available at the time of computing the forward

wavefield.

This setting can now be used in several locations listed below by adding

sn.WavefieldCompression( forward_wavefield_sampling_interval=N )

if checkpoints should be stored for a resampling interval of

N during the

adjoint run.

forward_wavefield_sampling_interval=1 corresponds to no compression and is

equivalent to what was done prior to this release.

This is a (small) breaking change that requires updating the syntax of

inversion-related functionality!

new optional arguments:

The wavefield compression settings now enter as an optional argument into

some objects and functions.

If not given, it will default to

forward_wavefield_sampling_interval=1,

which is consistent with Salvus <= 0.11.47.

Examples:

# Inversion actions:
p.actions.inversion.compute_misfits(
    ...,
    derived_job_config=sn.WavefieldCompression(
        forward_wavefield_sampling_interval=5
    ),
    ...
)
p.actions.inversion.compute_gradients(
    ...,
    wavefield_compression=sn.WavefieldCompression(
        forward_wavefield_sampling_interval=5
    ),
    ...
)
p.actions.inversion.sum_gradients(
    ...,
    wavefield_compression=sn.WavefieldCompression(
        forward_wavefield_sampling_interval=5
    ),
    ...
)
# InverseProblemConfiguration
sn.InverseProblemConfiguration(
    ...
    wavefield_compression=sn.WavefieldCompression(
        forward_wavefield_sampling_interval=5
    ),
    ...
)

deprecated parameters:

The parameters

store_adjoint_checkpoints in p.simulations.launch(...) and

store_checkpoints in p.actions.inversion.compute_misfits(...) are

deprecated. Instead, use

derived_job_config=WavefieldCompression(forward_wavefield_sampling_interval=N).

derived_job_config=None is the new default, which corresponds

to the deprecated store_adjoint_checkpoints=False or

store_checkpoints=False, respectively.

breaking changes:

compute_misfits and compute_gradients in p.actions.inversion are now

keyword-only functions. Additionally, we made the wavefield compression

settings mandatory arguments of a few lower-level functions, such as:

p.misfits.get_gradient_filenames()
p.simulations.get_adjoint_input_files()
p.actions.validation.validate_model_gradients()

In case you are using one of these directly, you will notice an error like

TypeError: compute_gradients() needs keyword-only argument wavefield_compression

which can be fixed by just adding the wavefield_compression object shown

above.

Salvus version 0.11.47

Released: 2022-03-23

Minor update to patch a bug introduced with 0.11.46.

SalvusProject

Fix a bug introduced with 0.11.46, which broke backward compatibility of a

project when deserializing an

UnstructuredMeshSimulationConfiguration.

Salvus version 0.11.46

Released: 2022-03-18

This release comes with a fairly long list of small improvements and bug fixes

listed below.

Notable new features include (full) gradient sources for elastic simulations,

GPU support for acoustic gradient sources, better memory management of

inversions, several tweaks regarding window selection and weighting,

and a more robust and faster algorithm to attach receivers.

Thanks again to all users who reported bugs and requested features!

SalvusCompute

Added vector gradient sources for elastic media.

SalvusCompute

Scalar gradient sources now also work on GPUs.

SalvusFlow

Initializing a site with SalvusFlow now runs a test job using the

default number of ranks for that site instead of 2.

SalvusFlow

The site configuration for the GridEngine and PBS site types now allows

simplistic expressions:

[[sites.site_name.site_specific.additional_qsub_arguments]]
name = "pe"
value = "mpi {NODES * TASKS_PER_NODE}"

[[sites.site_name.site_specific.additional_qsub_arguments]]
name = "l"
value = "ngpus={int(ceil(RANKS / 12))}"
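
To see what such templates compute, the sketch below evaluates each {expression} against a small namespace of job variables. It is a hypothetical illustration, not how SalvusFlow evaluates these settings. It relies on Python's `eval`, which must never be applied to untrusted input.

```python
import math
import re


def expand_expressions(template: str, **variables) -> str:
    """Replace every {expression} in template by its evaluated value.

    Only the given variables plus int, ceil and floor are visible; builtins are
    removed. eval is not a security boundary, so use trusted input only.
    """
    namespace = {"__builtins__": {}, "int": int, "ceil": math.ceil, "floor": math.floor}
    namespace.update(variables)
    return re.sub(r"\{([^{}]+)\}", lambda m: str(eval(m.group(1), namespace)), template)


pe = expand_expressions("mpi {NODES * TASKS_PER_NODE}", NODES=2, TASKS_PER_NODE=36)
ngpus = expand_expressions("ngpus={int(ceil(RANKS / 12))}", RANKS=25)
```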

SalvusFlow

Add a new

lat_lng_to_utm_crs() helper routine to directly get a pyproj UTM

CRS object from a point given in latitude and longitude.

SalvusFlow

The relative source and receiver placement code is much faster now due to

algorithmic changes and code optimizations. Additionally, a few previously

uncaught edge cases now work as expected.

SalvusFlow

Added local versions of all HPC sites that directly interact with the job

queuing and file systems without using SSH.

SalvusFlow

New

salvus-cli alias for the salvus-flow command line call. This will

become the default at some point.

SalvusProject

Safely remove adjoint sources after the adjoint simulation.

This happens automatically after retrieving the results from the adjoint

simulation. Adjoint source files will be recomputed on-the-fly if required.

This can also be done explicitly using

p.misfits.compute_adjoint_source()

SalvusProject

Fix a bug in the hash computation for adjoint simulations that could

result in non-unique hashes in special cases.

SalvusProject

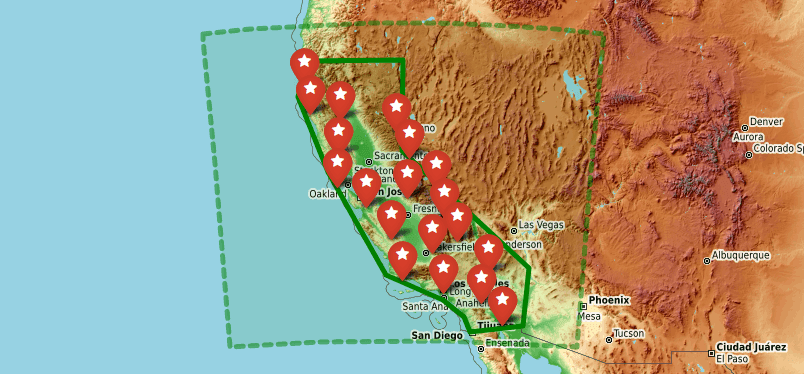

New default basemap for the seismology receiver weights and misfit maps.

Furthermore they are now configurable.

SalvusProject

The error log output of the seismological window picking routine is now more

descriptive.

SalvusProject

SalvusProject will no longer create an empty

EventWindowAndWeightSet in case

the window selection routine did not pick a single window for a chosen event.

An appropriate warning message and log file entry will be created in that

case.

SalvusProject

New function to get all events with windows for a chosen data selection

configuration:

p.actions.seismology.get_events_with_windows("DSC_NAME")

SalvusProject

Added some more receiver weight validation steps and Salvus now properly deals

with a few more edge cases, for example when the total receiver weight sum of

an event is zero.
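
One such edge case is a weight vector that sums to zero, where a naive normalization divides by zero. The sketch below validates the weights and handles that case explicitly. Scaling the weights to sum to one, and returning zeros for an all-zero event, are illustrative assumptions, not Salvus's documented behavior. The function name is hypothetical.

```python
import numpy as np


def normalize_receiver_weights(weights):
    """Scale non-negative receiver weights to sum to one (zeros if they sum to zero)."""
    w = np.asarray(weights, dtype=float)
    if not np.all(np.isfinite(w)) or np.any(w < 0.0):
        raise ValueError("Receiver weights must be finite and non-negative.")
    total = w.sum()
    if total == 0.0:
        # Edge case: no receiver contributes, so avoid dividing by zero.
        return np.zeros_like(w)
    return w / total
```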

SalvusProject

UTMDomain objects no longer require the ellipsoid to be passed.

Salvus version 0.11.45

Released: 2022-01-27

First release of 2022 with several small bug fixes listed below.

As a new feature, SalvusProject supports multiple source time functions for

different point sources, which facilitates simulating finite faults or

encoded sources.

SalvusCompute

Bugfix for the Clayton-Engquist absorbing boundaries in 2-D anisotropic

physics.

SalvusCompute

Fixes a performance bug for adjoint GPU runs in purely elastic media. In this

case, an unnecessary serial step is now skipped when loading checkpoints.

SalvusFlow

Enable

salvus-flow upgrade and salvus-flow upgrade-site for

double precision versions using the salvus_f64 binary.

SalvusFlow

The

init-site script will wait a bit longer for the stderr in case it is

not yet available due to some synchronization delays on shared and parallel

file systems.

SalvusMesh

The

UnstructuredMesh.extrude_side_set_2D() method now also works as expected

for higher order meshes. Additionally fixed an issue with inverted elements

in certain scenarios.

SalvusOpt

Fix a bug in the gradient view of the iteration widget.

Now the mapped gradient accumulated from all events is correctly displayed in

the widget.

SalvusProject

Fix a bug that prevented loading an

InverseProblemConfiguration after

the project has been transferred to another python environment with a

different site configuration.

SalvusProject

Enable different source time functions for events with multiple point sources.

Example:

Event(
    event_name="event",
    sources=[point_src1, point_src2],
    receivers=receivers,
)
...
EventConfiguration(
    waveform_simulation_configuration=...,
    wavelet=[
        simple_config.stf.Ricker(center_frequency=1.0),
        simple_config.stf.Ricker(center_frequency=2.0),
    ],
)
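
Each entry in the wavelet list is a separate source time function. For reference, a Ricker wavelet with peak frequency f is w(t) = (1 - 2 * pi**2 * f**2 * (t - ts)**2) * exp(-pi**2 * f**2 * (t - ts)**2). The NumPy sketch below evaluates two such wavelets, one per source. This entry does not specify how Salvus's Ricker class chooses its time shift ts, so the shift is an explicit, assumed parameter here.

```python
import numpy as np


def ricker(t, center_frequency, time_shift):
    """Ricker wavelet with peak frequency center_frequency, centered at time_shift."""
    a = (np.pi * center_frequency * (np.asarray(t, dtype=float) - time_shift)) ** 2
    return (1.0 - 2.0 * a) * np.exp(-a)


t = np.arange(0.0, 3.0, 1.0e-3)
# One wavelet per point source, in the same order as the sources.
wavelets = [ricker(t, 1.0, time_shift=1.5), ricker(t, 2.0, time_shift=1.5)]
```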

Salvus version 0.11.44

Released: 2021-12-06

Minor release with a few bug fixes listed below.

Shoutout to the user community for identifying these bugs

and helping us to fix them.

SalvusCompute

Fix a bug for CPU sites where surface output with multiple side sets per element

could cause an issue in the surface wavefield output ordering.

SalvusFlow

Fix a bug in (de)-serialization of receiver objects when accidentally passing

int instead of float types.

SalvusFlow

Fix a bug when plotting adjoint sources.

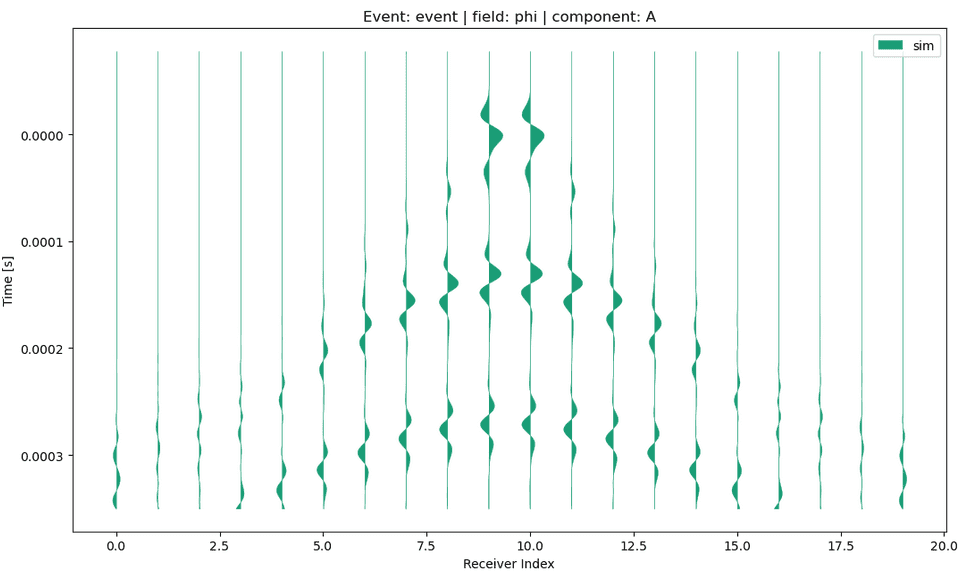

SalvusProject

Fix a bug in plotting shot gathers for single data series.

Example:

p.viz.shotgather(
    data="simulation", event="event", receiver_field="phi", component="A"
)

Salvus version 0.11.43

Released: 2021-10-21

Minor update with a bug fix for serializing cythonized

functions.

SalvusProject

The function serialization can now serialize cythonized functions imported

from other modules.

Salvus version 0.11.42

Released: 2021-10-19

Minor update with some internal changes and

support for frequency-domain output in SalvusProject.

SalvusProject

Add support for frequency-domain output to SalvusProject.

Optional output of the Fourier transform for a discrete set of frequencies

can now be passed as

extra_output_configuration to the launch function:

p.simulations.launch(
    ranks_per_job=4,
    site_name="local",
    events=p.events.list(),
    simulation_configuration="simulation",
    extra_output_configuration={
        "frequency_domain": {
            "fields": ["displacement"],
            "frequencies": [1.0, 2.0, 3.0],
        }
    },
)

Salvus version 0.11.41

Released: 2021-10-08

This is a large release that adds many features useful for the inversion of seismic data, particularly for land and near-surface applications.

SalvusFlow

The observed and synthetic data can now optionally be downsampled before the misfit and adjoint source are computed. The adjoint source is then upsampled again and scaled correctly. This is useful for expensive misfit functionals.

It can be used either at the event misfit level:

    event_misfit = EventMisfit(
        ...,
        # Optionally downsample to the given number of npts.
        max_samples_for_misfit_computation=max_samples_for_misfit_computation,
    )

Or at the misfit configuration level within SalvusProject:

    mc = MisfitConfiguration(
        ...,
        # Optionally downsample to the given number of npts.
        max_samples_for_misfit_computation=max_samples_for_misfit_computation,
    )
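The idea behind max_samples_for_misfit_computation can be sketched with NumPy for a simple L2 misfit. This is not Salvus' implementation: the L2 misfit, the linear interpolation onto a coarser grid, and the dt-ratio rescaling of the upsampled adjoint source are assumptions chosen for illustration, and `downsampled_l2_misfit` is a hypothetical name.

```python
import numpy as np


def l2_misfit_and_adjoint(obs, syn, dt):
    """Return 0.5 * sum((syn - obs)**2) * dt and its derivative w.r.t. each syn sample."""
    residual = syn - obs
    return 0.5 * np.sum(residual**2) * dt, residual * dt


def downsampled_l2_misfit(obs, syn, dt, max_samples):
    """Evaluate the misfit on at most max_samples points; return a full-length adjoint."""
    npts = len(obs)
    if npts <= max_samples:
        return l2_misfit_and_adjoint(obs, syn, dt)
    t = np.arange(npts) * dt
    # Coarser grid spanning the same time window.
    t_coarse = np.linspace(t[0], t[-1], max_samples)
    dt_coarse = t_coarse[1] - t_coarse[0]
    misfit, adj_coarse = l2_misfit_and_adjoint(
        np.interp(t_coarse, t, obs), np.interp(t_coarse, t, syn), dt_coarse
    )
    # Upsample to the original grid and rescale from dt_coarse to dt per sample.
    adjoint = np.interp(t, t_coarse, adj_coarse) * (dt / dt_coarse)
    return misfit, adjoint


dt = 1e-3
t = np.arange(1001) * dt
obs = np.sin(2 * np.pi * t)
syn = np.sin(2 * np.pi * (t - 0.05))
full = l2_misfit_and_adjoint(obs, syn, dt)
coarse = downsampled_l2_misfit(obs, syn, dt, max_samples=201)
```

For smooth, well-sampled traces the coarse misfit and the upsampled adjoint source stay close to their full-resolution counterparts, while the misfit itself is evaluated on five times fewer samples.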

SalvusMesh

Fix a bug that caused the mesh widget to crash for fields with only negative values.

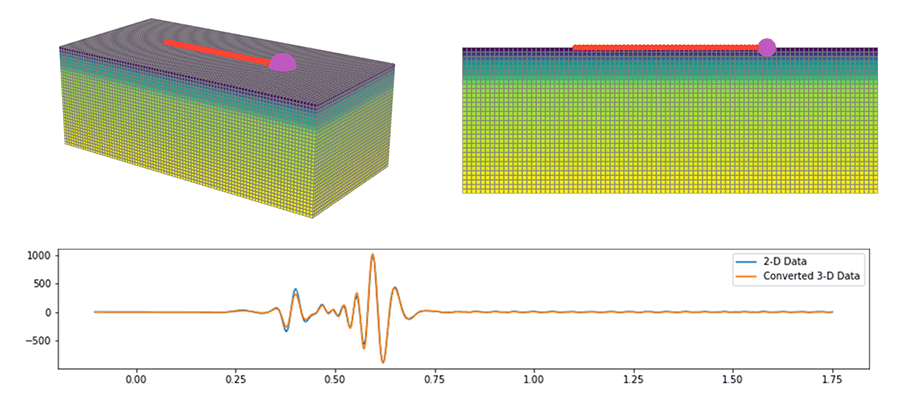

SalvusModules

Added comprehensive support for converting data from a point source to an equivalent line source, which is necessary, for example, when using 3-D observed data in 2-D inversions.

We added a variety of different transforms, each applicable in different use cases. Please have a look at the documentation for details.

    from salvus.modules.near_surface.processing import convert_point_to_line_source

    new_st = convert_point_to_line_source(
        st=st,
        source_coordinates=[22.0, 1.0],
        receiver_coordinates=[132.0, 0.0],
        transform_type="single_velocity_exact",
        velocity_m_s=550.0,
    )

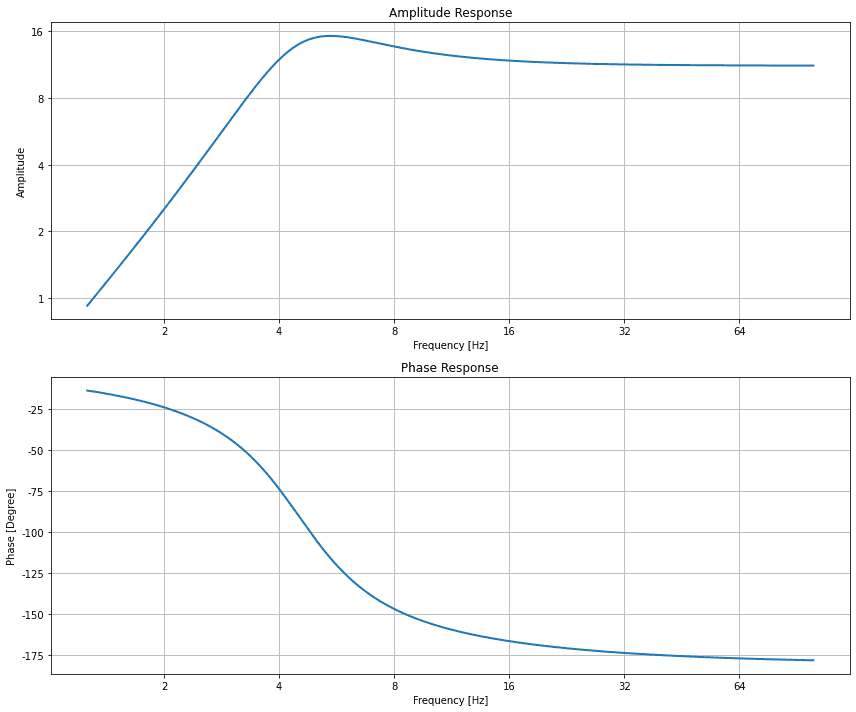

SalvusModules

Added utility functions to compute, remove, and plot geophone responses.

    import numpy as np
    from salvus.modules.near_surface.processing import geophone_response

    frequencies = np.logspace(0.1, 2, 2000)
    response = geophone_response.compute_geophone_response(
        frequencies=frequencies,
        geophone_frequency=4.5,
        damping_ratio=0.4,
        calibration_factor=11.2,
    )
    geophone_response.plot_response(frequencies=frequencies, response=response)

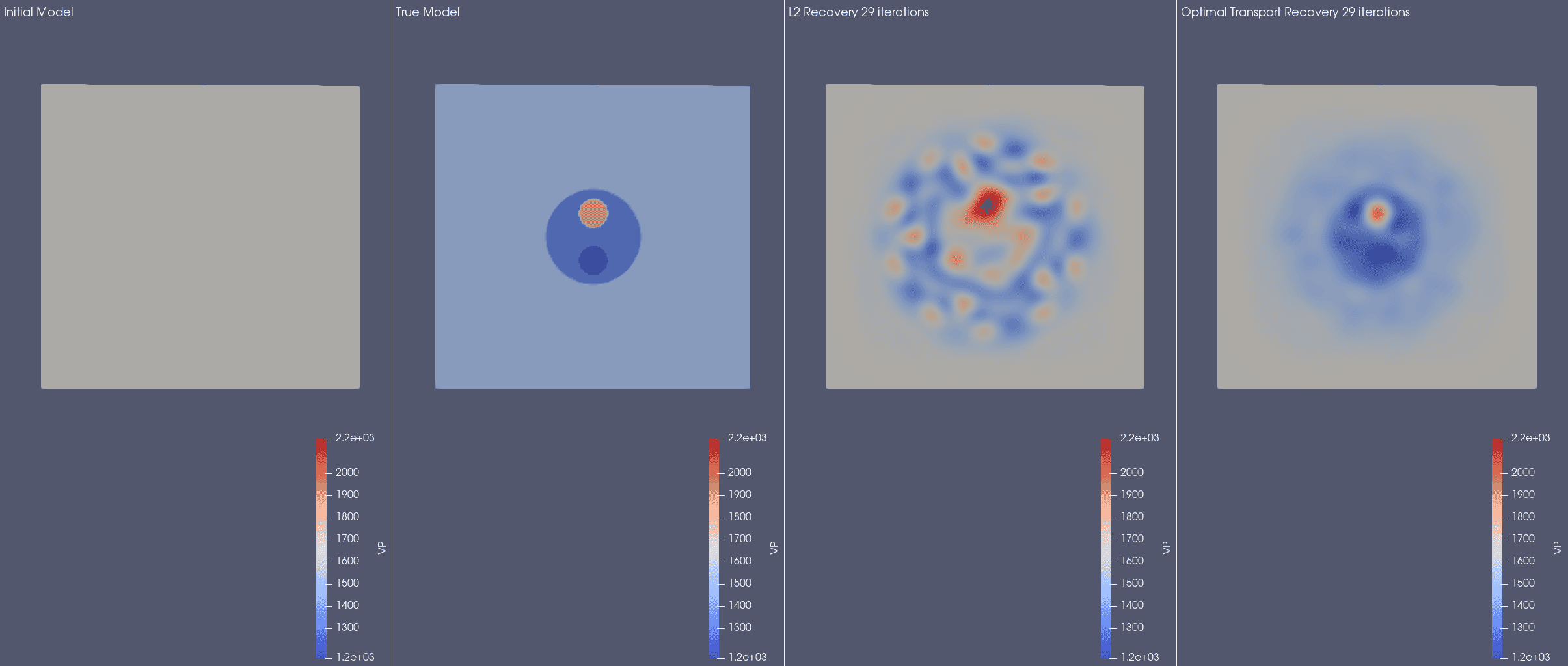

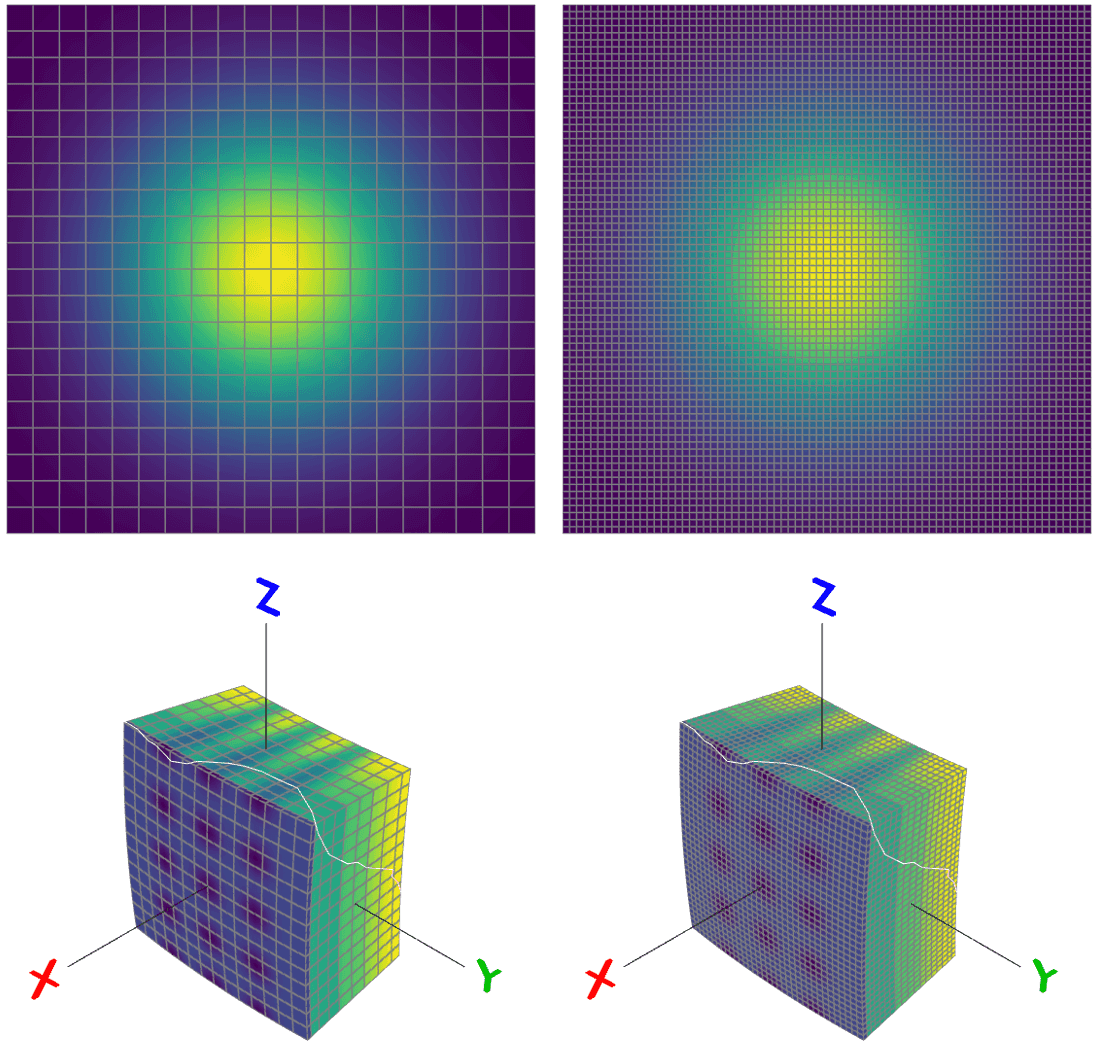
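To make the parameters above more tangible, the sketch below uses the textbook transfer function of a moving-coil geophone modelled as a damped harmonic oscillator, and removes it again with a simple water-level deconvolution. It is an illustration under those assumptions and is not necessarily the formula or sign convention used by geophone_response; the function names are hypothetical.

```python
import numpy as np


def geophone_velocity_response(
    frequencies, geophone_frequency, damping_ratio, calibration_factor
):
    """Complex ground-velocity-to-voltage response of a damped moving-coil geophone."""
    w = 2 * np.pi * np.asarray(frequencies, dtype=float)
    w0 = 2 * np.pi * geophone_frequency
    # Tends to calibration_factor well above w0 and falls off like w**2 below it.
    return calibration_factor * w**2 / (w**2 - w0**2 - 2j * damping_ratio * w0 * w)


def remove_geophone_response(trace, dt, water_level=1e-3, **response_kwargs):
    """Deconvolve the geophone response, clipping small |H| at a water level."""
    n = len(trace)
    freqs = np.fft.rfftfreq(n, d=dt)
    h = geophone_velocity_response(freqs, **response_kwargs)
    floor = water_level * np.max(np.abs(h))
    # Keep the phase but raise tiny amplitudes (e.g. at 0 Hz) to the floor.
    h = np.where(np.abs(h) < floor, floor * np.exp(1j * np.angle(h)), h)
    return np.fft.irfft(np.fft.rfft(trace) / h, n=n)


params = dict(geophone_frequency=4.5, damping_ratio=0.4, calibration_factor=11.2)
# At the natural frequency |H| = calibration_factor / (2 * damping_ratio) = 14.0.
response = geophone_velocity_response([4.5, 1000.0], **params)
```

The water level prevents division by (near) zero below the natural frequency, at the cost of not restoring content in the clipped band.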

SalvusOpt

Added an implementation of a graph space optimal transport misfit measure as introduced in this paper:

L. Métivier, R. Brossier, Q. Mérigot, and E. Oudet. 2019. "A graph space optimal transport distance as a generalization of Lp distances: application to a seismic imaging inverse problem." Inverse Problems, Volume 35, Number 8, https://doi.org/10.1088/1361-6420/ab206f

You can use it by choosing "graph_space_optimal_tranport" wherever Salvus accepts misfit functionals. The only tuning parameter is "max_expected_time_shift" which, as the name implies, should be set to the maximum expected time shift in seconds for individual wiggles between observed and synthetic data. For many problems this can completely avoid the cycle skipping problem.
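One way to see how a graph space optimal transport distance works is to treat each trace as a cloud of points (t_i, d_i) and solve an assignment problem between the two clouds, following the paper cited above. The sketch below does this with SciPy's linear_sum_assignment. Scaling the time axis by max_expected_time_shift and the amplitude axis by the maximum observed amplitude is an assumption made here for illustration and need not match how Salvus uses that parameter; `gsot_misfit` is a hypothetical name.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def gsot_misfit(t, observed, synthetic, max_expected_time_shift):
    """Graph space OT distance between two traces sampled on the same time axis t."""
    amplitude_scale = np.max(np.abs(observed)) or 1.0
    # Each sample becomes a 2-D point (time, amplitude); both axes are made dimensionless.
    tt = t / max_expected_time_shift
    obs = observed / amplitude_scale
    syn = synthetic / amplitude_scale
    # cost[i, j]: squared distance between synthetic point i and observed point j.
    cost = (tt[:, None] - tt[None, :]) ** 2 + (syn[:, None] - obs[None, :]) ** 2
    rows, cols = linear_sum_assignment(cost)
    # Uniform weights: the optimal plan is a permutation; report its mean cost.
    return cost[rows, cols].mean()


def ricker(t, f, t0):
    a = (np.pi * f * (t - t0)) ** 2
    return (1.0 - 2.0 * a) * np.exp(-a)


t = np.linspace(0.0, 2.0, 201)
observed = ricker(t, 5.0, 0.6)
synthetic = ricker(t, 5.0, 0.75)
distance = gsot_misfit(t, observed, synthetic, max_expected_time_shift=0.5)
```

Because time enters the cost directly, a shifted wiggle can be matched by moving points along the time axis rather than only by comparing amplitudes sample by sample, which is the intuition behind the reduced sensitivity to cycle skipping.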

This, admittedly manufactured, synthetic inversion example demonstrates the potential gains:

SalvusProject

The function serialization can now serialize numpy arrays and dictionaries as closure values.
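Closure values are the variables a nested function captures from its enclosing scope. The sketch below shows how Python exposes them through the standard `__code__.co_freevars` and `__closure__` attributes, which is the kind of information a function serializer has to capture. It is not Salvus' serialization code, and `make_processing_function` is a hypothetical example.

```python
import numpy as np


def closure_values(func):
    """Return a {name: value} dict of the variables captured by func's closure."""
    if func.__closure__ is None:
        return {}
    return {
        name: cell.cell_contents
        for name, cell in zip(func.__code__.co_freevars, func.__closure__)
    }


def make_processing_function(weights, options):
    # weights (a NumPy array) and options (a dict) become closure values.
    def process(data):
        return data * weights * options["scale"]

    return process


process = make_processing_function(np.array([1.0, 0.5]), {"scale": 2.0})
captured = closure_values(process)
```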

SalvusProject

Added the ability to use processing fragments to more easily perform some simple and common processing steps.

For example, to bandpass and then normalize some data, use "EXTERNAL_DATA:raw_data | bandpass(1.0, 2.0) | normalize" as the data name. Available processing fragments:

- time_shift({SHIFT})
- normalize
- scale({FACTOR})
- flip
- bandpass({FREQ_MIN}, {FREQ_MAX}[, zerophase][, corners={CORNERS}])
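The fragment syntax can be illustrated with a small parser that splits a data name at the pipe characters and reads each fragment's positional arguments, bare flags such as zerophase, and keyword arguments such as corners. This is a hypothetical sketch of the grammar listed above (`parse_data_name` is not a Salvus function), and it assumes arguments are plain numbers or flags without nested commas.

```python
import ast


def parse_fragment(fragment):
    """Parse 'name' or 'name(arg, flag, key=value)' into (name, args, kwargs)."""
    if "(" not in fragment:
        return fragment.strip(), [], {}
    name, _, arg_str = fragment.partition("(")
    args, kwargs = [], {}
    for item in arg_str.rstrip(")").split(","):
        item = item.strip()
        if not item:
            continue
        if "=" in item:
            key, value = item.split("=", 1)
            kwargs[key.strip()] = ast.literal_eval(value.strip())
        else:
            try:
                args.append(ast.literal_eval(item))
            except (ValueError, SyntaxError):
                # Bare identifiers such as 'zerophase' act as boolean flags.
                kwargs[item] = True
    return name.strip(), args, kwargs


def parse_data_name(data_name):
    """Split 'SOURCE:name | frag | frag(...)' into the base name and parsed fragments."""
    base, *fragments = (part.strip() for part in data_name.split("|"))
    return base, [parse_fragment(f) for f in fragments]


base, steps = parse_data_name(
    "EXTERNAL_DATA:raw_data | bandpass(1.0, 2.0, zerophase, corners=4) | normalize"
)
```

Using ast.literal_eval rather than eval keeps the parser restricted to literals, so a data name cannot execute arbitrary code.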

SalvusProject

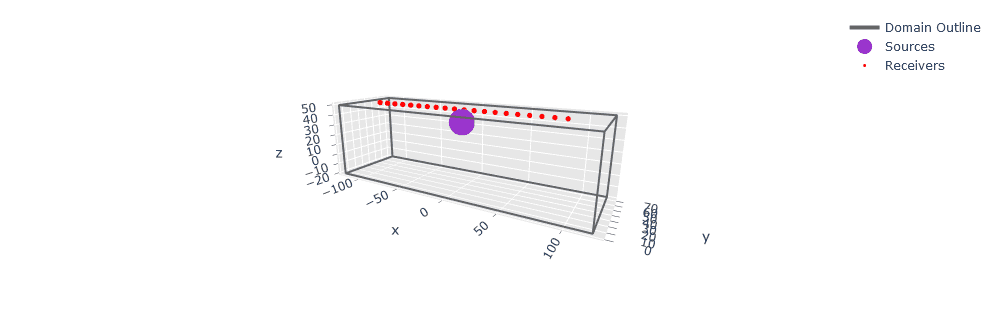

Added an automatic domain preview visualization for 3-D box domains.

SalvusProject

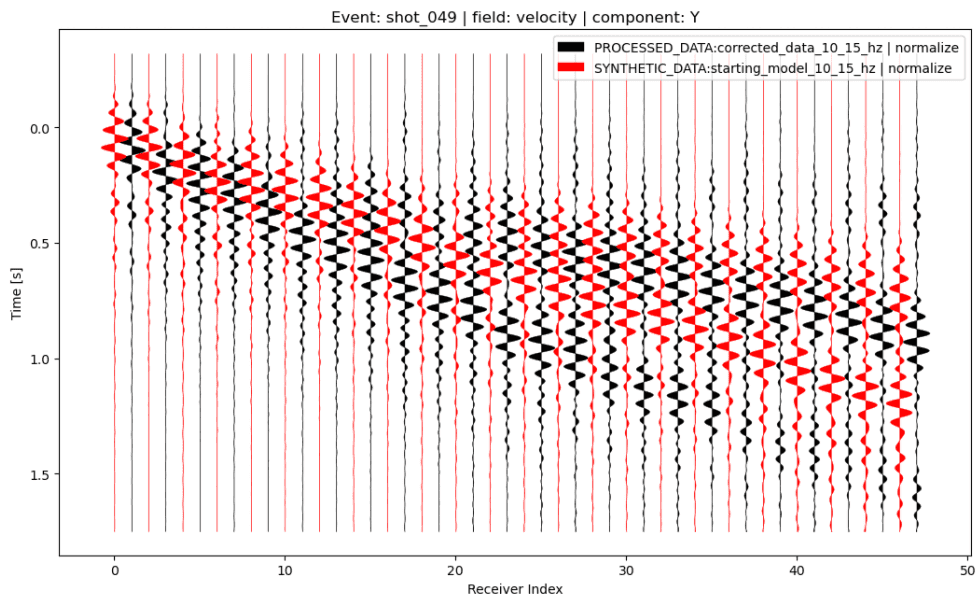

Added an improved shotgather visualization. Amongst other things, it can now plot interleaved traces from multiple data series and sort the receivers with a lambda function:

    p.viz.shotgather(
        data=[
            "PROCESSED_DATA:corrected_data_10_15_hz | normalize",
            "SYNTHETIC_DATA:starting_model_10_15_hz | normalize",
        ],
        event="shot_049",
        receiver_field="velocity",
        component="Y",
        sort_by=lambda r: r.location[0],
    )
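The sort_by argument is a key function, used the same way as the key of Python's built-in sorted. The sketch below uses a hypothetical stand-in Receiver class with a location attribute to show how lambda r: r.location[0] orders receivers by their x coordinate; it does not use the Salvus receiver classes.

```python
from dataclasses import dataclass


@dataclass
class Receiver:
    """Hypothetical stand-in for a receiver with a name and (x, y) location."""

    name: str
    location: tuple


receivers = [
    Receiver("r2", (30.0, 0.0)),
    Receiver("r0", (10.0, 0.0)),
    Receiver("r1", (20.0, 0.0)),
]
# Same key function as in the shotgather call: sort by the x coordinate.
ordered = sorted(receivers, key=lambda r: r.location[0])
print([r.name for r in ordered])  # ['r0', 'r1', 'r2']
```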

SalvusProject

Allow passing an interpolation mode to the mesh_from_xarray function in the Salvus Toolbox. This allows one to override the default spline interpolation routine, which may be useful in the presence of strong velocity contrasts.

Salvus version 0.11.40

Released: 2021-09-25

This release contains improvements to the mesh-to-mesh interpolation implementation, as well as a few fixes and quality-of-life improvements to SalvusProject functionality and SalvusFlow database handling.

SalvusFlow

All input files are stored in Salvus' internal database. From now on, these are compressed, which can save a lot of space for input files with a large number of receivers. Existing databases will be migrated to the new structure automatically.
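Input files with many receivers tend to be highly repetitive, which is why compression pays off. The sketch below compresses a hypothetical JSON input description with the standard-library zlib module and checks the round trip; the structure of the input and the choice of zlib are assumptions, not a description of Salvus' database format.

```python
import json
import zlib


def compress_input(config):
    """Serialize a dict to JSON and compress it with zlib."""
    return zlib.compress(json.dumps(config).encode("utf-8"), level=9)


def decompress_input(blob):
    """Inverse of compress_input."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


# Hypothetical input description with many similar receiver entries.
config = {
    "receivers": [
        {
            "network_code": "XX",
            "station_code": f"{i:05d}",
            "location": [i * 10.0, 0.0],
            "fields": ["displacement"],
        }
        for i in range(5000)
    ]
}
raw = json.dumps(config).encode("utf-8")
blob = compress_input(config)
print(f"{len(raw)} bytes -> {len(blob)} bytes")
```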

SalvusMesh

Improve the mesh-to-mesh interpolation algorithm by allowing the element sets to be further restricted in both the source and destination meshes. This is useful, for instance, when interpolating between meshes with oceans. The heuristic that decides which element to take parameters from, if an element is outside of the mesh or outside of a certain layer set, has also been improved. This should improve the algorithm's stability in such cases.

SalvusProject

The domain map plots for spherical chunk and UTM domains now automatically zoom to fit upon loading.

SalvusProject

Salvus now makes fewer assumptions about the internal layout of AppEEARS DEM files, so it works with both older and newer AppEEARS files.

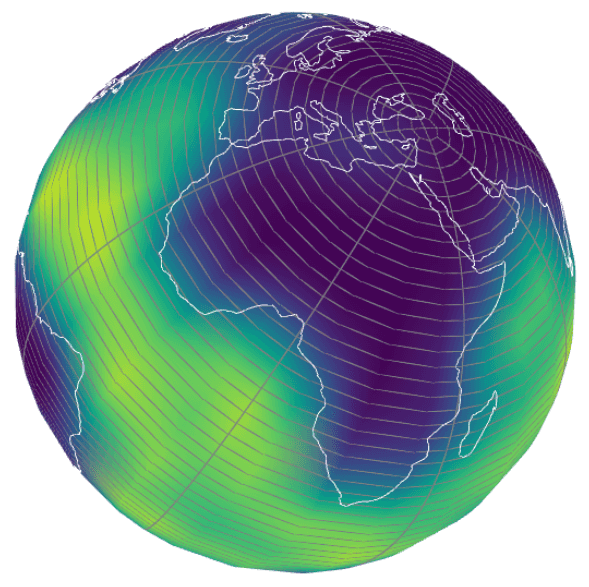

Salvus version 0.11.39

Released: 2021-09-09

This is a minor release that fixes a bug when applying anisotropic refinements to the crust in a global domain, and reduces the memory usage of some SalvusFlow routines.

SalvusFlow

Improve detection of duplicated mesh files when transferring data of JobArrays to remote sites.
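A common way to detect duplicated files before a transfer is to hash their contents and upload each distinct content only once. The sketch below does this with hashlib.sha256 over in-memory file contents; whether Salvus uses content hashes for this, and the names used here, are assumptions.

```python
import hashlib
from collections import defaultdict


def group_duplicates(files):
    """Map each content hash to the list of file names sharing that content."""
    groups = defaultdict(list)
    for name, content in files.items():
        groups[hashlib.sha256(content).hexdigest()].append(name)
    return dict(groups)


def files_to_transfer(files):
    """Pick one representative file name per distinct content."""
    return sorted(names[0] for names in group_duplicates(files).values())


# Hypothetical job array where three jobs share the same mesh.
files = {
    "job_0/mesh.h5": b"mesh-A",
    "job_1/mesh.h5": b"mesh-A",
    "job_2/mesh.h5": b"mesh-A",
    "job_3/mesh.h5": b"mesh-B",
}
print(files_to_transfer(files))  # ['job_0/mesh.h5', 'job_3/mesh.h5']
```

For real mesh files one would feed the hash in chunks (hashlib objects support repeated update calls) instead of reading whole files into memory.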

SalvusFlow

Reduce the memory usage of simple_config.Waveform objects through lazy evaluation of UnstructuredMesh objects.

SalvusMesh

Fix a bug where an undefined reference to a refinement skeleton could be deleted before it was defined.

Salvus version 0.11.38

Released: 2021-08-11

Minor update with some utilities to inspect the contents of a project and to free up space during an inversion by deleting disposable files.

SalvusProject

Add a utility to reduce the storage requirements of an inversion by deleting files which are no longer needed and/or can be recomputed.

Example:

    p.inversions.delete_disposable_files(
        inverse_problem_configuration="my_inversion",
        data_to_remove=["auxiliary", "waveforms", "gradients"],
    )

Note that data from the initial model and from unfinished iterations will be kept. It is recommended to remove files in the order auxiliary, waveforms, gradients, because gradients are the most expensive to recompute.

SalvusProject

Add a utility to delete simulation results (waveforms or gradients).

Example:

    # Delete waveforms.
    p.simulations.delete_results(
        simulation_configuration="my_sim_config",
        events=["event"],
    )

    # Delete gradients.
    p.simulations.delete_results(
        simulation_configuration="my_sim_config",
        misfit_configuration="my_misfit_config",
        events=p.events.list(),
    )

SalvusProject

Add a utility to list a project's contents.

This function will be called automatically when a project is loaded.

Salvus version 0.11.37

Released: 2021-07-15

This is a small maintenance/bugfix release that primarily serves to activate 2-D fully anisotropic elastic simulations on CUDA-capable GPUs.

SalvusCompute

Activate 2-D fully anisotropic simulations on CUDA-capable GPUs, and fix some bugs in the associated gradient computations. No API changes are required from users: simply run 2-D anisotropic simulations and inversions on a GPU-enabled site!

Salvus version 0.11.36

Released: 2021-07-02

This release adds more flexibility for handling side-set extrusion and topography in 2-D, as well as a more robust implementation of processing function serialization. Additionally, the minimum supported CUDA Compute Capability has been lowered to 3.5, so Kepler-based NVIDIA GPUs can now be used.

SalvusCompute

Previously, the lowest CUDA compute capability (CC) supported by Salvus was 6.0, due to the unavailability of a double-precision atomic add function in earlier CCs. A workaround is now implemented, which should allow Salvus to run on GPUs of CC 3.5 and greater. Note that NVIDIA will soon deprecate architectures below CC 6.0, so at some point in the future new Salvus versions will again only support CC 6.0 and greater.

SalvusFlow

Add initial support for the GridEngine job queueing system.

There are a number of different versions of GridEngine out there, and minor adjustments might be necessary for Salvus to run on them. Please let us know if you encounter any.

SalvusMesh

Add a utility to extrude meshes across side sets in 2D.

The functionality is currently limited to Cartesian meshes and side sets aligned with a coordinate axis.

Example:

    import numpy as np

    mesh = basic_mesh.CartesianHomogeneousAcoustic2D(
        vp=1500.0,
        rho=1000.0,
        x_max=1.0,
        y_max=0.5,
        max_frequency=1e3,
    ).create_mesh()
    mesh = mesh.extrude_side_set_2D(
        side_set="x1",
        offsets=np.array([0.0, 2.0, 4.0]),
        direction="x",
    )

SalvusMesh

More general definition of 2D DEM objects for non-spherical models. Now, y0 can be set to -np.inf to deform, in the y direction, all elements with negative y coordinates.

Example:

    import numpy as np

    mesh = basic_mesh.CartesianHomogeneousAcoustic2D(
        vp=1500.0,
        rho=1000.0,
        x_max=1.0,
        y_max=2.0,
        max_frequency=10e3,
    ).create_mesh()
    # Shift the mesh down so that part of it has negative y coordinates.
    mesh.points[:, 1] -= 0.5
    mesh.add_dem_2D(
        x=np.array([0.0, 1.0]),
        dem=np.array([-0.25, 0.25]),
        y0=-np.inf,
        y1=np.inf,
        kx=1,
        ky=1,
    )
    mesh.apply_dem()

SalvusProject

SalvusProject sometimes has to serialize Python functions to disk (e.g. misfits, processing functions, ...). This now works with more functions, such as functions that call other custom functions, closure values within with statements, and a few other cases. In general, it should be more reliable.

Salvus version 0.11.35